Stockpile Historian: Industrial-Grade Time-Series Database System

A sophisticated real-time 3D volumetric modeling and time-series storage system for industrial materials handling operations

Executive Summary

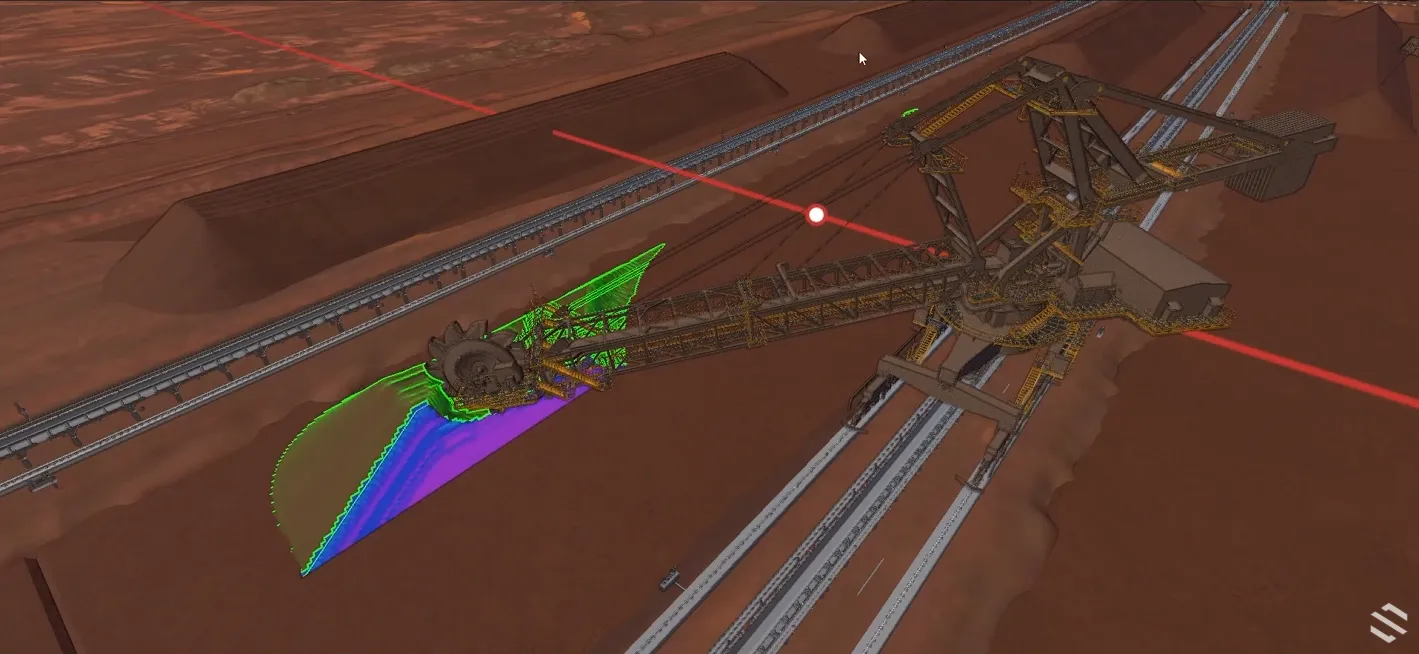

Stockpile Historian is a specialized time-series database system engineered to track, model, and serve volumetric stockpile data for mining and materials handling operations. Built in Rust for performance and reliability, it processes machine telemetry data in real-time to maintain accurate 3D voxel models of material stockpiles, enabling operational insights and historical analysis.

Key Capabilities

- Real-time 3D Volumetric Modeling - Maintains accurate voxel-based representations of stockpiles as material is deposited and reclaimed

- Multi-Source Data Ingestion - Connects to GE Proficy Historian, Excel spreadsheets, and other data sources via extensible ingestor architecture

- Physics-Based Simulation - Models material settling with angle of repose calculations and realistic material flow

- High-Performance Storage - DuckDB-based time-series storage with automatic database rotation and efficient querying

- Real-Time Event Streaming - gRPC services deliver stockyard changes to web clients with sub-second latency

- Material Property Tracking - Tracks ancillary properties (iron content, moisture) throughout the stockpile lifecycle

- Historical Replay - Time-travel capability to replay past operations for analysis and validation

Business Value

For Operations Teams: Provides accurate, real-time visibility into stockpile volumes, material locations, and machine operations, enabling better planning and resource optimization.

For Management: Offers historical analysis capabilities to identify trends, optimize workflows, and support data-driven decision making with reliable audit trails.

For Engineering Teams: Delivers a maintainable, well-architected system built on modern technologies with comprehensive observability and testing infrastructure.

System Architecture

High-Level Overview

The Stockpile Historian follows a microservices-oriented architecture with clear separation of concerns across data ingestion, processing, storage, and serving layers.

REST API] Excel[Excel Spreadsheets

Test Data] Other[Other Historians

Snowflake, etc.] end subgraph "Ingestion Layer" SPGE[sph-ge-proficy

GE Ingestor] SPEX[sph-excel

Excel Ingestor] SPIN[Custom Ingestors

Extensible] end subgraph "Historian Core" GRPC[gRPC Interface

Service] Ingestion[Ingestion Service

Processing Engine] SY[Stockyard Manager

Voxel Simulation] DB[(DuckDB

Time-Series Storage)] Broadcast[Broadcast Service

Event Broadcasting] end subgraph "Client Layer" Web[Web Applications

Visualization] API[API Clients

gRPC Streams] end GE -->|OAuth2/REST| SPGE Excel -->|DuckDB Import| SPEX Other -->|Custom Protocol| SPIN SPGE -->|gRPC Stream| GRPC SPEX -->|gRPC Stream| GRPC SPIN -->|gRPC Stream| GRPC GRPC --> Ingestion Ingestion -->|Machine Simulation| SY Ingestion -->|Write Events| DB Ingestion -->|Stream Events| Broadcast Broadcast -->|Real-time Stream| Web Broadcast -->|Real-time Stream| API DB -.->|Historical Query| Broadcast style Ingestion fill:#e1f5ff,color:#1a1a1a style SY fill:#e1f5ff,color:#1a1a1a style DB fill:#ffe1e1,color:#1a1a1a style Broadcast fill:#e1ffe1,color:#1a1a1a

Architectural Principles

Separation of Concerns

- Ingestors handle external connectivity and data transformation (separate processes)

- Historian Core manages business logic, voxel simulation, and data persistence

- Services provide client-facing APIs with event streaming and historical queries

Event-Driven Pipeline

- Asynchronous message passing between components using Tokio channels

- Loose coupling allows independent scaling and failure isolation

- Backpressure control prevents memory exhaustion under load

Immutable Time-Series Data

- Write-once semantics for historical records ensure data integrity

- Voxel states derived from machine operations (not directly written) guarantee consistency

- Revision tracking enables change detection and validation

Performance-First Design

- Written in Rust for memory safety without garbage collection overhead

- Efficient voxel algorithms using flat arrays and cache-friendly data structures

- Parallel processing where possible (database writes, client streaming)

Technology Stack

Core Technologies

Language & Runtime

- Rust 2021 Edition - Memory safety, zero-cost abstractions, fearless concurrency

- Tokio - High-performance async runtime for I/O-bound operations

- Cargo Workspace - Modular architecture with 9+ crates

Communication & APIs

- gRPC (Tonic) - Efficient binary protocol with bidirectional streaming

- Protocol Buffers - Strongly-typed message definitions for forward/backward compatibility

- REST - GE Proficy Historian integration via OpenAPI-generated client

Data Storage & Processing

- DuckDB - Embedded OLAP database optimized for analytical queries

- Apache Arrow - Columnar data format for efficient voxel storage

- R2D2 - Connection pooling for concurrent database access

Configuration & Observability

- YAML - Human-readable configuration with environment variable expansion

- OpenTelemetry - Distributed tracing and metrics collection

- Tracing/Tracing-Subscriber - Structured logging with configurable output

- Serde - Zero-copy serialization for configuration and data exchange

Math & Geometry

- Glam - SIMD-accelerated vector math for 3D calculations

- Geo - Spatial data structures and algorithms

Why These Technologies?

Rust was chosen for its combination of performance, safety, and modern language features. The mining industry requires systems that run 24/7 with zero tolerance for crashes or data corruption. Rust’s ownership system eliminates entire classes of bugs at compile time.

gRPC enables efficient bidirectional streaming between ingestors and the historian, and from the historian to web clients. Protocol Buffers ensure schema evolution without breaking existing clients.

DuckDB provides embedded OLAP capabilities without the operational overhead of a separate database server. Its columnar storage format aligns perfectly with time-series data access patterns.

Tokio allows handling thousands of concurrent operations without thread-per-connection overhead, critical for managing multiple ingestors and client connections simultaneously.

Core Subsystems

1. Voxel System (crates/voxel/)

The voxel system is the heart of the 3D modeling capability, maintaining accurate volumetric representations of stockpiles.

3D Grid Container] VD[VoxelData

Individual Voxel] Algo[Deposit/Excavate

Algorithms] Track[Change Tracker

Validation] end subgraph "Voxel Data" Rev[Revision Number

u32] Time[Updated Timestamp

DateTime] Fill[Fill Amount

0.0 to max] Ore[Ore Properties

Fe, Moisture, etc.] end subgraph "Algorithms" Cone[Cone Deposit

Simple Placement] Settle[Settle Deposit

Angle of Repose] Cyl[Cylinder Excavate

Reclaimer Pattern] end VM --> VD VM --> Algo VM --> Track VD --> Rev VD --> Time VD --> Fill VD --> Ore Algo --> Cone Algo --> Settle Algo --> Cyl style VM fill:#e1f5ff,color:#1a1a1a style Algo fill:#ffe1e1,color:#1a1a1a

Key Features

Voxel Data Structure

pub struct VoxelData {

revision: u32, // Monotonically increasing update counter

updated_at: DateTime<Utc>, // Last modification timestamp

fill_amount: f64, // Volume occupied (0.0 to voxel_capacity)

ore_properties: OreProperties, // Material characteristics (Fe%, moisture)

}Deposition Algorithm

- Cone Algorithm - Fast, simple vertical deposition

- Settle Algorithm - Physics-based with angle of repose calculations for realistic material flow

- Material “settles” downward and outward based on natural settling angles

- Blend ore properties when voxels receive material from multiple sources

Excavation Algorithm

- Cylindrical pattern matching reclaimer bucket wheel geometry

- Removes material layer by layer as the machine traverses

- Updates voxel states atomically to prevent partial updates

Validation & Integrity

- Strict validation mode (feature flag) checks all voxel updates stay within physical bounds

- Revision tracking detects concurrent modifications

- Change tracker accumulates updates for efficient database writes

Design Rationale: The voxel approach provides pixel-perfect accuracy for stockpile modeling. Storing 1m³ voxels allows tracking material properties at fine granularity while maintaining reasonable memory footprint (1 million voxels ≈ 64MB).

2. Stockyard System (crates/stockyard/)

The stockyard system manages the physical layout and simulates machine operations based on telemetry data.

Orchestrator] CM[Canyon Manager

Storage Areas] Machines[Machine Simulators

Stackers/Reclaimers] end subgraph "Canyon Components" Geo[Canyon Geometry

Bounds & Origin] VMap[Voxel Map

3D Grid] Empty[Empty Flag

Optimization] end subgraph "Machine Simulation" Stacker[Stacker Simulation

Deposition Calc] Reclaimer[Reclaimer Simulation

Excavation Calc] Lifecycle[Lifecycle Manager

Stockpile Bounds] end SYM --> CM SYM --> Machines CM --> Geo CM --> VMap CM --> Empty Machines --> Stacker Machines --> Reclaimer Machines --> Lifecycle style SYM fill:#e1f5ff,color:#1a1a1a style Machines fill:#ffe1e1,color:#1a1a1a

Machine Simulation Process

(slew, luff, travel, discharge) Ingestion->>StockyardMgr: Process Stacker Data StockyardMgr->>StockyardMgr: Calculate 3D Position

(geometry, boom length) StockyardMgr->>CanyonMgr: Deposit Material

(position, tonnes, properties) CanyonMgr->>VoxelMap: Run Deposition Algorithm VoxelMap->>VoxelMap: Update Affected Voxels VoxelMap-->>CanyonMgr: Changed Voxels List CanyonMgr-->>StockyardMgr: Update Result StockyardMgr-->>Ingestion: Stockyard Event Ingestion->>Ingestion: Send to DB Writer

& Event Stream

Coordinate System Management

The system handles three coordinate systems with automatic transformations:

- World Coordinates - Absolute positions in meters from site origin

- Canyon-Local Coordinates - Relative to canyon origin (0, 0)

- Voxel Indices - Discrete grid positions (i, j, k)

Machine Configuration Example:

stackers:

- name: Stacker-01

origin: [0, 1003.35] # World position

elevation: 6.0 # Height above ground

boom_length: 36.0 # Meters

throw_distance: 4.3 # Material trajectory

travel_vector: [1, 0] # Movement direction

slew_multiplier: -1.0 # Bearing conversion

slew_offset: 270.0 # North alignmentDesign Rationale: Separating machine simulation from voxel storage allows independent evolution of both subsystems. Machine configurations can be updated without changing voxel algorithms, and new machine types can be added by implementing the simulation interface.

3. Database Layer (crates/historian-db/)

The database layer provides persistent storage with a dual-database architecture optimized for different access patterns.

Stockyard Metadata] Pool2[Time-Series Pool

Current Window] end subgraph "Info Database" Meta[Stockyards

Machines

Canyons] SPMeta[Stockpile Metadata

Names, Bounds] end subgraph "Time-Series Database" Stacker[Stacker Data

Position, Discharge] Reclaimer[Reclaimer Data

Position, Excavation] Voxel[Voxel History

Changes Over Time] Lifecycle[Lifecycle Events

Stockpile Start/End] end subgraph "Database Rotation" Current[Current DB

db_time_0001.duckdb] Archive1[Previous DB

db_time_0000.duckdb] Archive2[Older DB

Read-Only Archive] end Pool1 --> Meta Pool1 --> SPMeta Pool2 --> Stacker Pool2 --> Reclaimer Pool2 --> Voxel Pool2 --> Lifecycle Pool2 -.->|Rotation| Current Current -.->|Daily/Weekly| Archive1 Archive1 -.->|Archival| Archive2 style Pool1 fill:#e1f5ff,color:#1a1a1a style Pool2 fill:#ffe1e1,color:#1a1a1a style Current fill:#e1ffe1,color:#1a1a1a

Key Features

Dual-Database Design

- Info Database - Static/slowly-changing metadata (stockyard layout, machine configs)

- Time-Series Database - High-frequency writes (voxel changes, machine telemetry)

Automatic Database Rotation

- Creates new time-series database files at configured intervals (hourly, daily, weekly)

- Prevents any single file from becoming too large

- Maintains separate sequence numbers for file naming

- Old databases remain available for historical queries

Asynchronous Write Pipeline

pub enum HistorianDbWriteMessage {

Batch(HistorianDbWriteBatch<VoxelWriteData>),

Batch(HistorianDbWriteBatch<StackerWriteData>),

Batch(HistorianDbWriteBatch<ReclaimerWriteData>),

Batch(HistorianDbWriteBatch<LifecycleWriteData>),

ClearCanyon(ClearCanyonWriteData),

}Database writes happen in a dedicated thread with bounded channel for backpressure control. This prevents memory exhaustion if writes can’t keep up with ingestion rate.

Schema Design

- Primary keys on (timestamp, machine_name) for machine data

- Voxel changes stored as deltas with revision numbers

- Apache Arrow format for efficient columnar voxel queries

- Indexes on timestamp ranges for fast historical retrieval

Design Rationale: Separating metadata from time-series data optimizes both for their access patterns. Metadata benefits from normalization and relationships; time-series data benefits from append-only writes and columnar storage. Database rotation keeps file sizes manageable for backup and prevents unbounded growth.

4. Ingestion Pipeline

The ingestion system features a pluggable architecture where separate processes handle different data sources.

.xlsx] DDB[DuckDB

In-Memory] Transform1[Data Transform

Validation] end subgraph "GE Proficy Ingestor" GE[GE REST API

OAuth2] Auth[Token Manager

Refresh] Transform2[Data Transform

Lifecycle] end subgraph "Historian Core" GRPC[gRPC Service

Bidirectional Stream] Entree[Entree Service

Processing] end Excel --> DDB DDB --> Transform1 Transform1 -->|gRPC Stream| GRPC GE --> Auth Auth --> Transform2 Transform2 -->|gRPC Stream| GRPC GRPC --> Entree style Transform1 fill:#e1f5ff,color:#1a1a1a style Transform2 fill:#ffe1e1,color:#1a1a1a style GRPC fill:#e1ffe1,color:#1a1a1a

Excel Ingestor (sph-excel)

Purpose: Test data ingestion from spreadsheets for development and validation

Features:

- Multi-sheet support for different machines

- Configurable ingestion speed (instant, real-time, multiplier)

- Timezone conversion and datetime parsing

- Validation (discharge rates, tonnage deltas, totalizer resets)

- Sample range selection for partial ingestion

- Dry-run mode for validation without sending data

DuckDB Integration:

-- Direct Excel import using DuckDB's read_xlsx function

SELECT timestamp, slew, luff, travel, discharge_rate, tonnes_stacked

FROM read_xlsx('data/stacker_log.xlsx', sheet='Sheet1')

WHERE timestamp BETWEEN ? AND ?

ORDER BY timestampGE Proficy Ingestor (sph-ge-proficy)

Purpose: Production data ingestion from GE Proficy Historian

Ingestion Modes:

-

Continuous Mode - Ongoing data collection for real-time operations

ingest_mode: !Continuous start_offset: !Minute 1 # How far back to start catchup_offset_minutes: 60 # Window for historical data -

Time Range Mode - Historical data ingestion for specific periods

ingest_mode: !TimeRange start_time: 2025-03-11T17:02:40Z end_time: 2025-03-12T14:58:40Z

Authentication:

- OAuth2 client credentials flow

- Automatic token refresh on expiration

- Secure credential storage via environment variables

Data Processing:

- Paginates large queries to avoid timeouts

- Maintains machine state between samples

- Queries backward for initial state before start time

- Filters invalid samples (NaN, infinite values)

- Implements retry logic for transient failures

Lifecycle Management:

- Retrieves stockpile lifecycle data (toe positions, tonnages)

- Detects when stockpiles are cleared

- Sends clear commands to historian to reset voxel maps

Design Rationale: Separating ingestors as independent processes provides fault isolation. If an ingestor crashes, the historian continues serving clients. Ingestors can be restarted independently, and new ingestors can be added without modifying the historian core.

5. Event Streaming & Client Services

The Broadcast service provides real-time event streaming to web applications and other clients.

(Voxel Changes) Broadcast->>Broadcast: Batch & Merge Events Broadcast->>Client: Stream Event Update end Note over Client,Database: Continuous bidirectional streaming

Ingestion Service - Data Processing

Responsibilities:

- Receives ingestor data via gRPC

- Validates field values (finite numbers, range checks)

- Processes machine telemetry through simulation

- Updates voxel maps based on machine operations

- Sends write messages to database thread

- Forwards events to Broadcast service for broadcasting

Processing Pipeline:

// Simplified processing flow

async fn ingest_stacker_data(data: StackerIngestData) -> Result<()> {

// 1. Validate input

validate_fields(&data)?;

// 2. Simulate machine operation

let deposition = stockyard.simulate_stacker(&data)?;

// 3. Update voxel map

let changes = stockyard.deposit_material(deposition)?;

// 4. Write to database (async)

db_writer.send(HistorianDbWriteBatch::Voxel(changes))?;

// 5. Stream to clients

event_sender.send(StockyardEvent::VoxelChange(changes))?;

Ok(())

}Broadcast Service - Event Broadcasting

Responsibilities:

- Manages client connections

- Sends initial state when clients connect

- Batches and merges events for efficiency

- Broadcasts updates to all connected clients

- Rate-limits event transmission

Event Batching:

- Collects events over a configurable time window (e.g., 100ms)

- Merges overlapping voxel changes to reduce message count

- Sends one batched update per window, reducing network overhead

Initial State Handling:

// When client connects, send complete current state

async fn handle_new_client(stream: &mut ResponseStream) {

// 1. Send stockyard layout

stream.send(InitialStockyardState { ... }).await?;

// 2. Send machine positions

stream.send(InitialMachineStates { ... }).await?;

// 3. Send current voxel heightmap

stream.send(InitialVoxelState { ... }).await?;

// 4. Start streaming real-time updates

subscribe_to_events(stream).await?;

}Design Rationale: The two-service architecture (Ingestion/Broadcast) separates data processing from client communication. Ingestion can focus on computation without blocking on client I/O. Broadcast handles all networking concerns including connection management, buffering, and rate limiting.

Design Patterns & Best Practices

Architectural Patterns

1. Pipeline Architecture (Pipes and Filters)

The system implements a clear data processing pipeline with distinct stages:

Ingest → Validate → Transform → Simulate → Store → StreamEach stage has a well-defined responsibility and communicates via message passing. This allows:

- Independent scaling of stages

- Easy testing of individual components

- Parallel processing where possible

Implementation: Tokio channels connect stages, with bounded channels for backpressure control where needed (database writes) and unbounded for event streaming.

2. Repository Pattern

Database access is abstracted behind well-defined interfaces:

pub trait HistorianDb {

fn write_voxel_changes(&self, changes: &[VoxelChange]) -> Result<()>;

fn query_machine_data(&self, start: DateTime, end: DateTime) -> Result<Vec<MachineData>>;

fn get_stockyard_layout(&self) -> Result<StockyardLayout>;

}Benefits:

- Database implementation can be swapped (though DuckDB is optimal for this use case)

- Easy mocking for unit tests

- Clear contract for data access

3. Strategy Pattern (Voxel Algorithms)

Multiple deposition algorithms are available, selectable via configuration:

pub enum VoxelDepositAlgorithm {

Cone, // Simple vertical deposition

Settle, // Physics-based angle of repose

}Benefits:

- Algorithm can be changed without code modification

- Easy to add new algorithms (e.g., advanced fluid dynamics simulation)

- A/B testing different approaches

4. Observer Pattern (Event Streaming)

The Broadcast service implements the observer pattern for broadcasting events:

pub struct EventBroadcaster {

subscribers: Vec<EventStream>,

}

impl EventBroadcaster {

pub async fn broadcast(&mut self, event: StockyardEvent) {

for subscriber in &mut self.subscribers {

subscriber.send(event.clone()).await.ok();

}

}

}Benefits:

- Dynamic client addition/removal

- Decouples event producers from consumers

- Supports multiple client types (web, API, logging)

5. Builder Pattern (Complex Configurations)

Stockyard setup uses builder pattern for complex initialization:

pub struct StockyardBuilder {

config: StockyardConfig,

database: Option<HistorianDb>,

// ... other fields

}

impl StockyardBuilder {

pub fn with_database(mut self, db: HistorianDb) -> Self { ... }

pub fn with_voxel_algorithm(mut self, algo: VoxelDepositAlgorithm) -> Self { ... }

pub fn build(self) -> Result<StockyardManager> { ... }

}Benefits:

- Step-by-step construction of complex objects

- Clear validation at build time

- Immutable result after construction

Code Organization Principles

1. Crate-Based Modularity

The workspace is organized into focused crates with clear boundaries:

crates/

├── voxel/ # 3D modeling (no I/O dependencies)

├── stockyard/ # Machine simulation (depends on voxel)

├── historian-db/ # Database access (depends on types)

├── types/ # Domain models (no dependencies)

├── ingestor-util/ # Shared ingestor code

└── observability/ # Logging and tracing setupBenefits:

- Fast compilation (only rebuild changed crates)

- Clear dependency graph prevents circular dependencies

- Easy to understand system boundaries

2. Error Handling Strategy

The codebase uses thiserror for custom error types with context:

#[derive(Debug, thiserror::Error)]

pub enum VoxelError {

#[error("Voxel index out of bounds: ({0}, {1}, {2})")]

OutOfBounds(usize, usize, usize),

#[error("Voxel overflow: attempted to add {attempted} to voxel with {current}/{max}")]

Overflow { attempted: f64, current: f64, max: f64 },

#[error("Invalid voxel state at ({x}, {y}, {z}): {reason}")]

InvalidState { x: usize, y: usize, z: usize, reason: String },

}Benefits:

- Self-documenting error conditions

- Easy to add context when propagating errors

- Plays well with

?operator andanyhowfor binary error handling

3. Type Safety for Domain Concepts

New types prevent common mistakes:

pub struct StockyardPosition { x: f64, z: f64 } // Not Vec2

pub struct VoxelIndex { i: usize, j: usize, k: usize } // Not (usize, usize, usize)

pub struct Tonnes(f64); // Not f64

pub struct Degrees(f64); // Not f64Benefits:

- Compiler prevents passing wrong coordinate system

- Self-documenting code (no ambiguity about units)

- Zero runtime overhead (newtype pattern)

Testing Strategy

Unit Tests

Each crate includes extensive unit tests for core algorithms:

#[cfg(test)]

mod tests {

use super::*;

#[test]

fn test_voxel_deposit_cone() {

let mut voxel_map = VoxelMap::new(10, 10, 10, 1.0);

let result = voxel_map.deposit_cone(5.0, 5.0, 100.0, properties);

assert!(result.is_ok());

assert_eq!(voxel_map.get_voxel(5, 0, 5).fill_amount, 100.0);

}

#[test]

fn test_voxel_deposit_overflow() {

let mut voxel_map = VoxelMap::new(1, 1, 1, 100.0);

// First deposit succeeds

voxel_map.deposit_cone(0.0, 0.0, 100.0, properties).unwrap();

// Second deposit should error (overflow)

let result = voxel_map.deposit_cone(0.0, 0.0, 50.0, properties);

assert!(matches!(result, Err(VoxelError::Overflow { .. })));

}

}Integration Tests

Full end-to-end tests validate the complete pipeline:

#[tokio::test]

async fn test_stacker_ingestion_pipeline() {

// 1. Start historian with test configuration

let historian = start_test_historian().await;

// 2. Send stacker data

let client = connect_ingestor_client(&historian).await;

client.send_stacker_data(test_data()).await.unwrap();

// 3. Verify voxel changes in database

let voxels = historian.query_voxels(canyon_id).await;

assert!(voxels.iter().any(|v| v.fill_amount > 0.0));

// 4. Verify events sent to clients

let events = historian.get_broadcast_events().await;

assert!(!events.is_empty());

}Test Scenarios

The tests/scenarios/ directory includes realistic test cases:

- baseline-reset - Handles totalizer resets gracefully

- no-bad-values - Validates clean data processing

- with-bad-values - Tests error handling for invalid data

- with-wrong-values - Verifies validation catches incorrect values

Performance Profiling

Benchmarks for critical algorithms:

#[bench]

fn bench_voxel_deposit_settle(b: &mut Bencher) {

let mut voxel_map = create_large_voxel_map();

b.iter(|| {

voxel_map.deposit_settle(50.0, 50.0, 1000.0, test_properties());

});

}Notable Technical Achievements

1. Zero-Copy Voxel Streaming with Apache Arrow

Voxel data is stored and transmitted using Apache Arrow’s columnar format, enabling zero-copy access:

pub fn create_voxel_record_batch(voxels: &[VoxelData]) -> RecordBatch {

let schema = Arc::new(Schema::new(vec![

Field::new("x", DataType::UInt32, false),

Field::new("y", DataType::UInt32, false),

Field::new("z", DataType::UInt32, false),

Field::new("fill_amount", DataType::Float64, false),

Field::new("revision", DataType::UInt32, false),

Field::new("timestamp", DataType::Timestamp(TimeUnit::Millisecond, Some("UTC".into())), false),

Field::new("fe", DataType::Float64, true),

Field::new("moisture", DataType::Float64, true),

]));

// Build columnar arrays directly from voxel data

RecordBatch::try_new(schema, arrays).unwrap()

}Benefits:

- Efficient serialization/deserialization (no memory copies)

- Interoperable with data science tools (Pandas, Polars, DuckDB)

- Compressed storage (columnar format compresses better than row-based)

2. Physics-Based Material Settling

The settle algorithm implements realistic material flow using angle of repose:

pub fn deposit_settle(&mut self, x: f64, z: f64, volume: f64, properties: OreProperties) {

let mut remaining = volume;

let mut current_layer = self.get_top_layer(x, z);

while remaining > 0.0 && current_layer > 0 {

// Try to fill current position

let filled = self.try_fill_voxel(x, z, current_layer, remaining, properties);

remaining -= filled;

if remaining > 0.0 {

// Material overflows, settle to neighbors based on angle of repose

let neighbors = self.get_settling_neighbors(x, z, self.angle_of_repose);

// Distribute remaining material proportionally

for (nx, nz, weight) in neighbors {

let settled = self.deposit_settle(nx, nz, remaining * weight, properties);

remaining -= settled;

}

}

current_layer += 1;

}

}Benefits:

- Realistic stockpile shapes matching physical behavior

- Handles complex multi-layer deposition

- Naturally creates sloped surfaces at configurable angles

3. Automatic Database Rotation with Zero Downtime

The system seamlessly switches to new database files without interrupting operations:

pub async fn check_and_rotate_database(&mut self) -> Result<()> {

if self.should_rotate() {

// 1. Create new database file

let new_db_path = self.next_database_path();

let new_pool = create_database_pool(&new_db_path)?;

// 2. Initialize schema in new database

initialize_time_series_schema(&new_pool)?;

// 3. Atomically swap pools (uses Arc for thread-safe sharing)

let old_pool = self.time_pool.swap(Arc::new(new_pool));

// 4. Wait for in-flight writes to complete on old pool

tokio::time::sleep(Duration::from_secs(1)).await;

// Old pool is dropped automatically when no more references exist

tracing::info!("Database rotated to: {}", new_db_path.display());

}

Ok(())

}Benefits:

- No downtime during rotation

- Bounded file sizes for easier backup and management

- Old databases remain accessible for historical queries

4. Bidirectional gRPC Streaming with Backpressure

The ingestion protocol uses streaming in both directions with flow control:

pub async fn handle_ingestor_stream(

&self,

mut stream: tonic::Streaming<IngestorMessage>,

) -> Result<Response<ResponseStream>> {

let (tx, rx) = mpsc::unbounded_channel();

tokio::spawn(async move {

while let Some(message) = stream.message().await? {

match message {

IngestorMessage::StackerData(data) => {

// Process data

let result = self.process_stacker_data(data).await;

// Send acknowledgment back to ingestor

tx.send(AckMessage {

sequence: data.sequence,

success: result.is_ok(),

}).ok();

}

// ... other message types

}

}

});

Ok(Response::new(ReceiverStream::new(rx)))

}Benefits:

- Ingestor knows when data is processed successfully

- Can retry failed messages

- Prevents overwhelming the historian with too much data

5. Graceful Degradation and Error Recovery

The system continues operating even when individual components fail:

pub async fn run_with_monitoring(mut self) -> Result<()> {

loop {

tokio::select! {

// Monitor ingestor processes

result = self.ingestor_monitor.wait_for_exit() => {

match self.config.ingestor_exit_action {

IngestorExitAction::Restart => {

tracing::warn!("Ingestor exited, restarting...");

self.restart_ingestor(result.ingestor_name)?;

}

IngestorExitAction::Shutdown => {

tracing::error!("Ingestor exited, shutting down...");

return Err(anyhow!("Ingestor failed"));

}

IngestorExitAction::Ignore => {

tracing::info!("Ingestor exited, continuing...");

}

}

}

// Continue serving clients regardless

_ = self.serve_clients() => {}

}

}

}Benefits:

- System remains available even if data ingestion stops

- Configurable failure handling for different deployment scenarios

- Comprehensive logging for debugging issues

6. Configuration-Driven Machine Geometry

Complex machine geometry is fully configurable without code changes:

stackers:

- name: Stacker-01

origin: [0, 1003.35] # World position

elevation: 6.0 # Height above ground

boom_length: 36.0 # Boom length in meters

throw_distance: 4.3 # Material lands ahead of boom tip

rotation: [0, 0, 0] # Euler angle rotation

travel_vector: [1, 0] # Moves along X axis

slew_multiplier: -1.0 # Reverses rotation direction

slew_offset: 270.0 # Bearing system alignment

rail_gradient_ratio: -0.003 # Track incline compensationBenefits:

- Same codebase works for different sites with different machine layouts

- Easy to adjust calibration without recompilation

- Configuration serves as documentation of physical installation

Business Value & Impact

Operational Benefits

Real-Time Visibility

- Operations teams can see current stockpile volumes and locations instantly

- Machine operators understand where material is being deposited/reclaimed

- Reduces uncertainty and supports better decision-making

Accurate Inventory Management

- Precise tonnage calculations based on 3D volumetric modeling

- Material property tracking (iron content, moisture) throughout lifecycle

- Eliminates manual surveys and reduces inventory discrepancies

Historical Analysis

- Complete audit trail of all machine operations and stockpile changes

- Replay past operations to investigate issues or optimize workflows

- Trend analysis to identify patterns and opportunities for improvement

Technical Benefits

Maintainability

- Clean architecture with clear separation of concerns

- Comprehensive test coverage prevents regressions

- Well-documented codebase with inline comments and external documentation

Scalability

- Handles multiple stockyards with hundreds of machines

- Efficient algorithms scale to large voxel grids (1M+ voxels)

- Database rotation prevents unbounded growth

Extensibility

- Plugin architecture for new data sources (implement gRPC ingestor)

- Configurable algorithms (new deposition strategies)

- Multiple client support (web, API, future integrations)

Reliability

- Memory-safe Rust eliminates entire classes of bugs

- Comprehensive error handling prevents crashes

- Graceful degradation ensures continued operation under failure

Development & Deployment

Modern DevOps

- Automated build pipeline (Azure Pipelines)

- Code-signed Windows installer

- Service wrapper for Windows deployment

- Environment-based configuration for different environments

Observability

- OpenTelemetry integration for distributed tracing

- Structured logging with configurable levels

- Automatic log rotation and cleanup

- Metrics collection for performance monitoring

Security

- OAuth2 authentication for external APIs

- Secure credential management via environment variables

- No hardcoded passwords or tokens

- Read-only database mode for replay operations

Development Velocity

Rapid Feature Development

The clean architecture enables fast feature additions:

- Added ancillary data tracking (Fe%, moisture) in a single sprint by extending the voxel data structure and updating serialization

- New deposition algorithm (cone vs. settle) implemented as a strategy pattern, requiring no changes to calling code

- GE Proficy Historian integration built in parallel with Excel ingestor, demonstrating extensibility

Active Evolution

The comprehensive changelog documents continuous improvement:

- Version 0.1.x to 0.2.x - Major refactoring of voxel crate, improved error handling, new algorithms

- Regular releases - Multiple installer releases with incremental improvements

- Responsive to requirements - Features added based on operational needs (lifecycle management, validation modes)

Code Quality Metrics

Codebase Statistics:

- 9+ crates - Modular architecture

- 20+ executables and tools - Complete ecosystem including test tools, emulators, visualizers

- Comprehensive tests - Unit tests, integration tests, scenario-based tests

- Documentation - User guide, developer guide, API documentation

Conclusion

Stockpile Historian demonstrates mastery of:

- Systems Programming - Low-level performance optimization with high-level abstractions

- Distributed Systems - Event streaming, data pipelines, concurrent processing

- Domain Modeling - Complex industrial processes captured in clean code

- Software Architecture - Separation of concerns, design patterns, maintainability

- DevOps Practices - CI/CD, observability, deployment automation

- Professional Software Development - Testing, documentation, version control, iterative improvement

The project showcases the ability to build production-grade industrial software that balances performance, reliability, and maintainability. It’s a comprehensive example of how modern software engineering practices can be applied to solve real-world industrial challenges.

Project Links

- Repository: Indi.Stockpile.Historian (Private)

- Technology: Rust, gRPC, DuckDB, Apache Arrow, Tokio

- Domain: Industrial IoT, Time-Series Databases, 3D Volumetric Modeling

- Scale: Real-time processing, millions of voxels, continuous operation