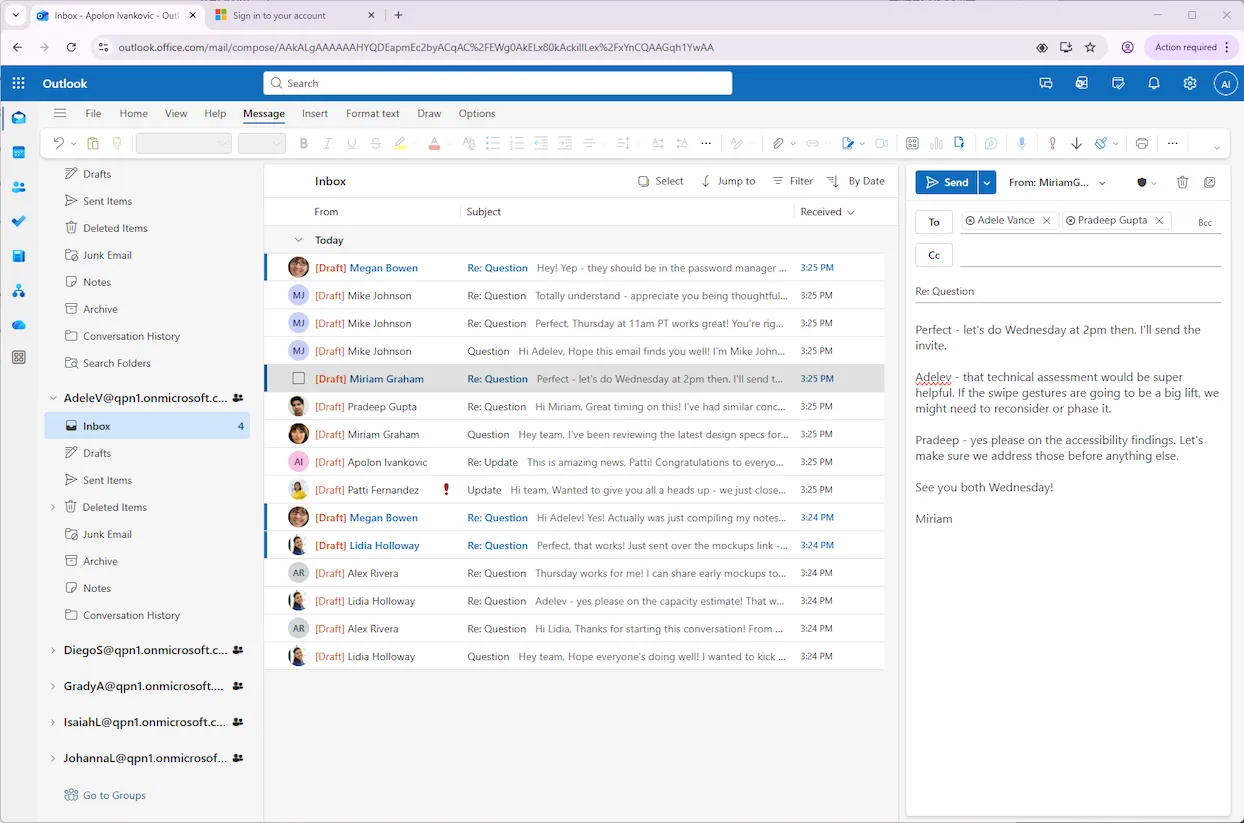

While building a simulated mailbox seeder utility for a Microsoft 365 development tenant, I ran into an interesting problem that highlights how deep research tools can complement coding agents. The utility generates fake emails and seeds them directly into mailboxes, allowing potentially destructive testing without risking production data. Using Cursor with Claude Opus and spec-driven development, I got the functionality working as expected. The simulated emails appeared in the correct form. But every single one was marked as draft.

Normally, without coding agents, this type of issue sends you down a research rabbit hole. You scour vendor documentation that may or may not cover your edge case. You dig through Stack Overflow, forums, and message boards looking for snippets of insight. You form hypotheses and test them, often hitting dead ends. A seemingly simple question like “how do I make emails non-draft when bypassing the normal send/receive cycle?” can consume hours or days. It’s necessary work, but it’s forensic research rather than straightforward programming.

I watched Claude Opus encounter this same research challenge. It attempted approaches similar to what I would try manually, but it wasn’t learning from failures. Without careful context management, it repeated the same unsuccessful attempts. I could have switched to human forensic mode and done the research myself, but I didn’t want to spend hours chasing down API nuances. Even with access to the dev tenant data, Opus kept getting caught in dev cycle loops.

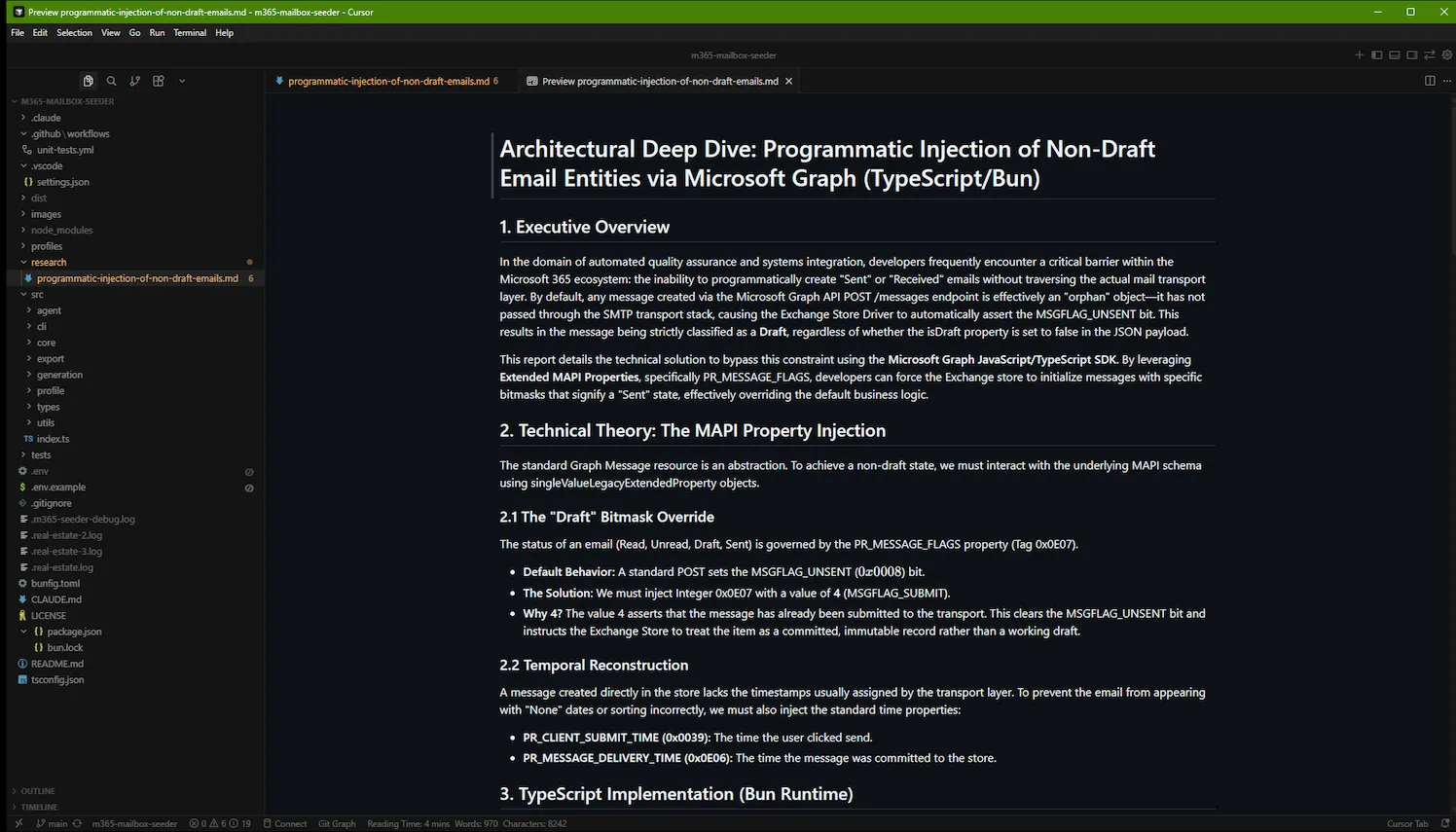

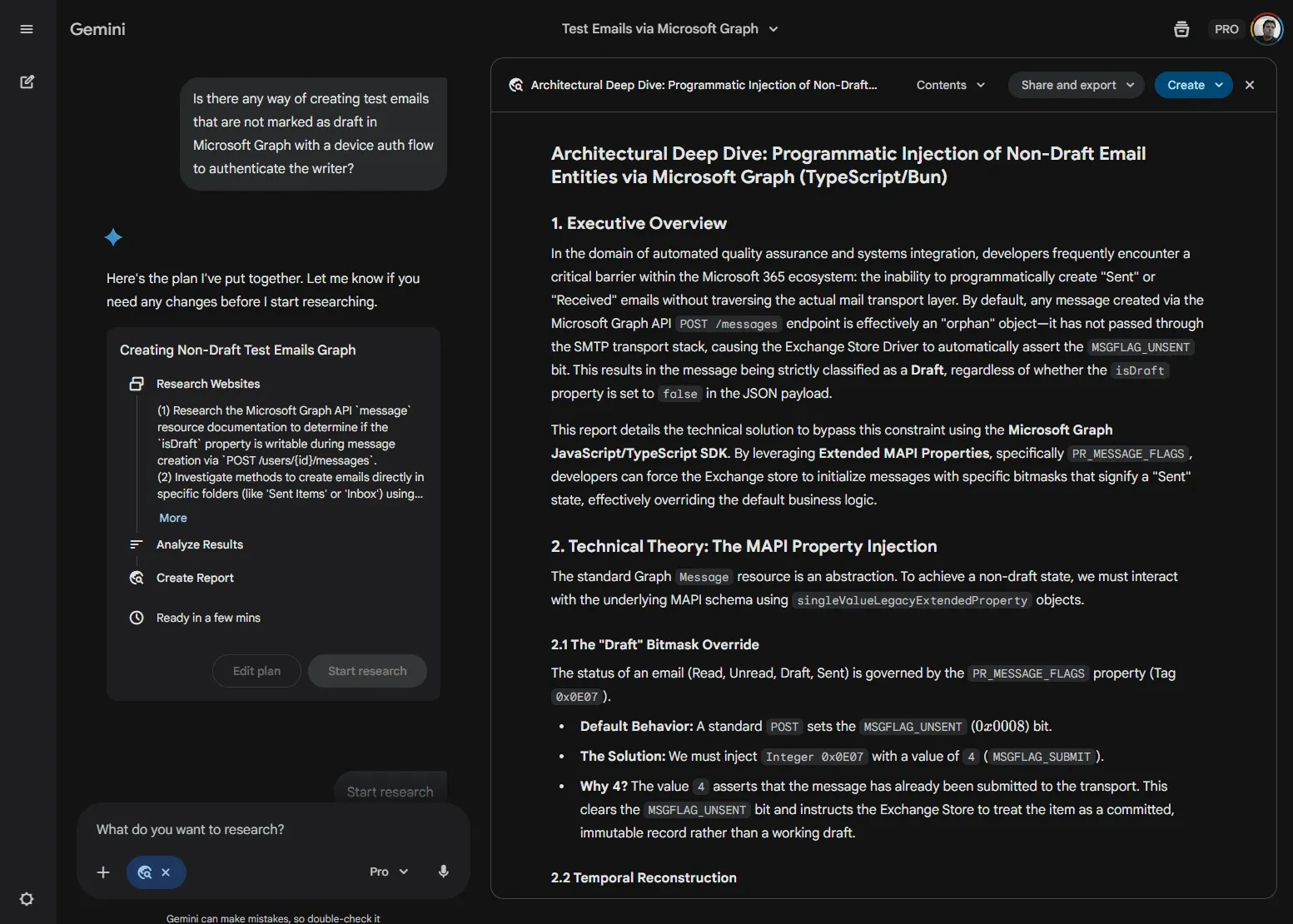

So I tried an experiment. I fed the problem to Gemini’s deep research tool. It performed its research and returned a comprehensive report. I converted that report to Markdown and added it to the repository as context. When I pointed Opus back at the development task with this new context available, it solved the problem immediately. No hassle.

I’ve now created a research subfolder at the repository root for storing non-trivial research results. These documents serve two purposes: they explain why certain approaches are taken in the code, and they provide context for future development work.

I’ve historically used the phrase “deployment is king” with development teams. Software means nothing unless it’s deployed. In the coding agent era, an adjacent principle carries equal weight: “context is king.” Storing deep research results in your repository is one practical way to ensure your coding agent has the context it needs to do the job you want it to do.