The Evolution of Remote Work Documentation

Back in 2001, I encountered what has become a defining challenge of remote development: establishing trust and visibility with clients across significant time zones. Working for a US environmental monitoring technology firm from Australia, I faced a fundamental constraint that initially seemed insurmountable: the traditional “visible presence” model of office work simply doesn’t translate across a 12-hour time difference. My initial approach of simply delivering quality code on schedule proved insufficient. Clients needed visibility into the development process itself.

The solution that emerged was deceptively simple: comprehensive daily email reports. Each day, I documented not just what I accomplished and hours worked, but the technical challenges encountered and, critically, how I resolved them. These reports included screenshots and detailed technical narratives that provided complete visibility into the development process. In hindsight, what started as a necessity for building client trust evolved into something far more valuable: a searchable archive of technical decisions and problem-solving patterns. At the time, I viewed this documentation as overhead; experience has since shown it was among the most valuable artifacts we created.

When Torq Software expanded, I extended this reporting discipline across the entire team. The practice proved remarkably effective for nearly two decades. It only began to falter after COVID, when the industry-wide shift to remote work made it increasingly difficult to maintain the discipline of meaningful (rather than perfunctory) documentation.

Modernizing Development Logs for the AI Era

Traditional timesheet systems, while functional for billing purposes, fundamentally miss what makes development documentation valuable. Having used and evaluated various time-tracking solutions over the years (from simple spreadsheets to sophisticated project management platforms), I’ve consistently found their brief note fields insufficient for capturing the nuanced technical context that actually matters. The constraint isn’t technical but conceptual: these systems optimize for billing granularity rather than knowledge preservation. In our current era of artificial intelligence and large language models, this limitation represents more than an inconvenience. It’s a missed opportunity for knowledge amplification.

The key insight here is that development logs containing screenshots, detailed narratives, and technical context effectively create an unstructured knowledge base that AI systems can navigate and understand. Tools like Cursor, Claude, and other coding agents don’t just search this information. They synthesize it to understand project evolution, technical decisions, and implementation patterns.

Recognizing this potential, I’ve recently revived my daily reporting practice with a deliberate AI-first approach. The transition wasn’t immediate: my initial attempts to modernize the email-based system proved clunky and inconsistent. Rather than email reports (which served their purpose well in their time), for some clients and projects I now maintain an internal developer blog built with Retype, a static site generator. After evaluating several documentation platforms, I found Retype’s balance of simplicity and extensibility best suited to this use case. The system organizes Markdown files hierarchically by financial year, month, and day, a structure that balances human readability with machine parseability.
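
The year/month/day hierarchy can be made concrete with a small path-building function. This is a minimal sketch, not the actual layout in use: the logs root folder and the FY2025/2024-07/2024-07-15.md naming are assumptions, and each Australian financial year (1 July to 30 June) is labelled by the calendar year in which it ends.

```python
from datetime import date
from pathlib import PurePosixPath


def financial_year(d: date) -> int:
    """Return the Australian financial year, labelled by its ending year.

    The financial year runs 1 July to 30 June, so 15 July 2024 is in FY2025.
    """
    return d.year + 1 if d.month >= 7 else d.year


def log_path(d: date, root: str = "logs") -> PurePosixPath:
    """Build a hypothetical FYxxxx/YYYY-MM/YYYY-MM-DD.md path for one day's log."""
    fy_folder = f"FY{financial_year(d)}"
    month_folder = d.strftime("%Y-%m")
    day_file = d.strftime("%Y-%m-%d") + ".md"
    return PurePosixPath(root, fy_folder, month_folder, day_file)


print(log_path(date(2024, 7, 15)))  # logs/FY2025/2024-07/2024-07-15.md
print(log_path(date(2025, 3, 2)))   # logs/FY2025/2025-03/2025-03-02.md
```

Zero-padded ISO dates sort chronologically as plain strings, which keeps both directory listings and any script that walks the tree in date order without extra parsing.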

Integrating AI-Friendly Documentation Conventions

Through iterative refinement (and several false starts that taught me the importance of maintaining consistency), the system has evolved to incorporate several design decisions specifically optimized for AI interaction:

- Structured Markdown Format: Each day receives its own Markdown file, organized hierarchically by time periods (a convention that enables both granular access and broad temporal queries)

- Badge-Based Time Tracking: Leveraging Retype’s badge syntax (analogous to GitHub status badges), timesheet data embeds directly within the narrative documentation

- Agent Instructions: Dedicated AGENTS.md and README.md files establish clear contracts with coding agents, specifying formatting conventions and processing expectations

- Automated Processing: Purpose-built scripts parse the Markdown to generate timesheet reports and compliance documentation
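
To illustrate how badge-based time tracking and automated processing fit together, here is a minimal sketch of a timesheet parser. Retype writes badges as [!badge text="..."], optionally with other attributes such as variant; the "Category: Nh" text format, the regular expression, and the function names are hypothetical conventions for this example, not the ones specified in the real AGENTS.md.

```python
import re
from collections import defaultdict

# Hypothetical convention: each time entry is a Retype badge whose text reads
# "<Category>: <hours>h", e.g. [!badge text="Admin: 1h"]. Adjust BADGE_RE to
# whatever format the project's AGENTS.md actually specifies.
BADGE_RE = re.compile(
    r'\[!badge\b[^\]]*?\btext="(?P<category>[^":]+):\s*(?P<hours>\d+(?:\.\d+)?)h"[^\]]*\]'
)


def format_badge(category: str, hours: float) -> str:
    """Render one time entry as a badge (":g" drops a trailing ".0")."""
    return f'[!badge text="{category}: {hours:g}h"]'


def sum_hours(markdown: str) -> dict[str, float]:
    """Total the hours per category across every time badge in a log."""
    totals: defaultdict[str, float] = defaultdict(float)
    for match in BADGE_RE.finditer(markdown):
        totals[match["category"].strip()] += float(match["hours"])
    return dict(totals)


day_log = """
Refactored the data ingestion module. [!badge text="Development: 2.5h"]
Client call about the release plan. [!badge variant="primary" text="Admin: 1h"]
Wrote up the caching decision. [!badge text="Development: 0.5h"]
"""
print(format_badge("Admin", 1))  # [!badge text="Admin: 1h"]
print(sum_hours(day_log))        # {'Development': 3.0, 'Admin': 1.0}
```

Because the hours live inside the narrative rather than in a separate timesheet, the same file serves human readers, coding agents, and the reporting script; run over a month's folder, sum_hours gives the totals a timesheet report needs.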

The approach serves two purposes at once. It preserves the transparency and trust-building aspects that made the original daily reports valuable, while building a queryable knowledge corpus that AI systems can navigate effectively.

The Australian R&D Tax Incentive Use Case

One particularly compelling experimental application emerged when considering the Australian government’s R&D tax incentive program requirements. Having previously spent considerable time manually preparing these submissions, I recognized an opportunity to test whether systematic AI processing could streamline this complex task. By structuring daily development logs with AI processing as a first-class consideration, the system should theoretically be able to automatically generate the comprehensive documentation required for R&D claims. The approach involves having the LLM post-process the entire corpus of daily development narratives, transforming unstructured technical notes into the specific formats and detail levels that government assessors expect.
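
The first step of that post-processing, gathering a full financial year of daily narratives into one chronologically ordered input for the model, might look like the sketch below. The folder layout, the prompt wording, and the function names are assumptions. The model call itself is deliberately left out because the tooling is not specified here, and the prompt is not official R&D Tax Incentive guidance.

```python
import re
from pathlib import Path

# Hypothetical prompt wording; check it against the current program
# requirements rather than relying on this sketch.
INSTRUCTIONS = (
    "The following are daily development logs for one financial year. "
    "Identify candidate R&D activities, the technical uncertainty each one "
    "addressed, and the experiments performed, citing the dates involved."
)

# Only files named like 2024-07-15.md count as daily logs; folder pages such
# as README.md or index.md are skipped.
DAY_FILE = re.compile(r"\d{4}-\d{2}-\d{2}")


def load_logs(fy_dir: Path) -> dict[str, str]:
    """Read every daily log under a financial-year folder, keyed by date."""
    return {
        p.stem: p.read_text(encoding="utf-8")
        for p in fy_dir.rglob("*.md")
        if DAY_FILE.fullmatch(p.stem)
    }


def assemble_corpus(logs: dict[str, str]) -> str:
    """Join the logs oldest first (ISO dates sort chronologically) after the prompt."""
    sections = [f"# {day}\n\n{logs[day].strip()}" for day in sorted(logs)]
    return INSTRUCTIONS + "\n\n" + "\n\n---\n\n".join(sections)


# Example usage (the path is illustrative):
# corpus = assemble_corpus(load_logs(Path("logs/FY2025")))
```

Keeping each entry under its date heading lets the model cite specific days, which makes its classifications easier to check by hand before anything goes to an assessor.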

The true test of this automation won’t come until year-end, when I submit the actual R&D application. Initially, I was skeptical that AI processing could match the nuanced understanding required for R&D classification; my background in industrial systems had made me cautious about automated analysis of complex technical work. Where I once spent days manually reviewing months of development artifacts to identify qualifying activities and technical innovations, the AI-augmented approach promises to do this in minutes, potentially with greater consistency and completeness. The premise of the experiment is that the AI shouldn’t replace human judgment but amplify the structured information I’m already capturing. Whether this holds up in practice will only become clear when the assessors review the submission.

A Micro-Example of AI-Assisted Development

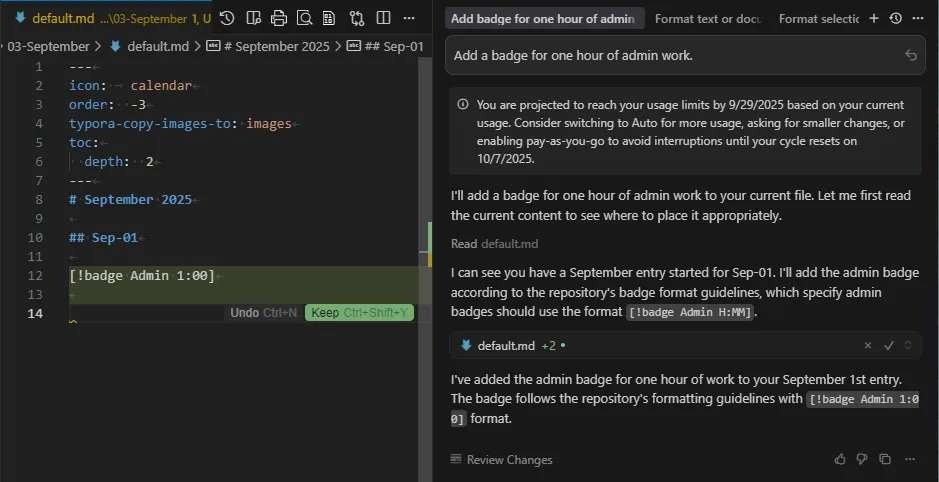

A recent experience crystallized the practical value of this AI-integrated approach. After a brief project hiatus, I returned to find my memory of the specific badge syntax had faded (a common occurrence when working with multiple documentation systems across different clients). My instinct was to fall back on the traditional approach of manually searching through documentation or scrolling through previous examples, but I caught myself and decided to test the AI integration instead. I simply queried my coding agent: “How do I add a badge for one hour of admin work?”

The AI parsed my AGENTS.md file and provided the precise syntax. This micro-interaction (taking mere seconds) illustrates something profound about how our relationship with development environments is evolving. As Andy Hunt and Dave Thomas discuss in “The Pragmatic Programmer,” reducing friction in common tasks has compounding effects on productivity.

The Desktop Publishing Analogy

This interaction triggered a connection to the desktop publishing revolution I witnessed in the 1980s (a transformation I underestimated at the time). When laser printers and page layout software democratized professional document production, both quality and volume increased by orders of magnitude. Living through that transition, I focused on the obvious benefits (faster document creation, better typography) while missing the more profound implications for information distribution and professional communication. Because quality expectations rose in tandem, many practitioners (myself included) failed to appreciate the true scale of the transformation they were experiencing.

I’m convinced we’re navigating a similar inflection point with AI assistance in software development. These incremental improvements (instantly recalling documentation conventions, synthesizing context from disparate sources, generating boilerplate with correct patterns) compound into substantial productivity multipliers in ways that become apparent only through sustained use. The challenge, as with desktop publishing before it, is that our quality expectations recalibrate so quickly that we risk underestimating the magnitude of change. Looking back at my initial skepticism about AI coding assistance just eighteen months ago, I’m struck by how rapidly my own expectations evolved once I began systematic integration.

The Future of AI-Integrated Business Software

The interaction patterns emerging from modern coding agents point toward a broader transformation in business software design. Cursor’s implementation particularly stands out. AI suggestions appear as highlighted proposals requiring explicit confirmation, establishing a clear contract between human intent and machine assistance.

In practice, Cursor’s interface presents AI-generated modifications in distinctive green highlighting, accompanied by confirmation prompts: “Is that what you meant?” This preserves user agency while delivering the benefits of AI augmentation. The pattern (suggest, highlight, confirm) is a design primitive that could transform forms processing, data entry workflows, report generation, and virtually every interaction point in business applications. Having implemented similar human-in-the-loop patterns in industrial SCADA systems at Torq Software, I’ve seen firsthand how this balance between automation and human oversight delivers efficiency gains while maintaining critical quality controls.
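
The suggest, highlight, confirm pattern can be sketched independently of any particular editor. The following is a minimal, hypothetical illustration for business form data, not Cursor's implementation: Suggestion, review, and console_confirm are invented names, and a terminal's green ANSI color stands in for the editor's highlighting.

```python
from dataclasses import dataclass
from typing import Callable

GREEN, RESET = "\033[32m", "\033[0m"  # ANSI codes used to highlight proposals


@dataclass(frozen=True)
class Suggestion:
    """An AI-proposed change to one field of a business record."""
    field: str
    current: str
    proposed: str


def review(record: dict[str, str], suggestions: list[Suggestion],
           confirm: Callable[[Suggestion], bool]) -> dict[str, str]:
    """Suggest, highlight, confirm: apply only the changes a human accepts.

    Returns a new dict; the original record is left unchanged. A suggestion
    made against a value that has since changed is treated as stale and skipped.
    """
    updated = dict(record)
    for s in suggestions:
        if updated.get(s.field) != s.current or s.proposed == s.current:
            continue  # stale or no-op suggestion: never shown to the user
        if confirm(s):
            updated[s.field] = s.proposed
    return updated


def console_confirm(s: Suggestion) -> bool:
    """Highlight the proposal in green and ask the user to confirm it."""
    print(f"{s.field}: {s.current!r} -> {GREEN}{s.proposed!r}{RESET}")
    return input("Is that what you meant? [y/N] ").strip().lower() == "y"
```

Passing confirm as a callback keeps the approval policy separate from the update logic: a terminal tool would call review(record, suggestions, console_confirm), while a GUI or a test could supply its own callback. Defaulting to "no" means an unanswered prompt never changes data.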

Beyond Predefined Interactions

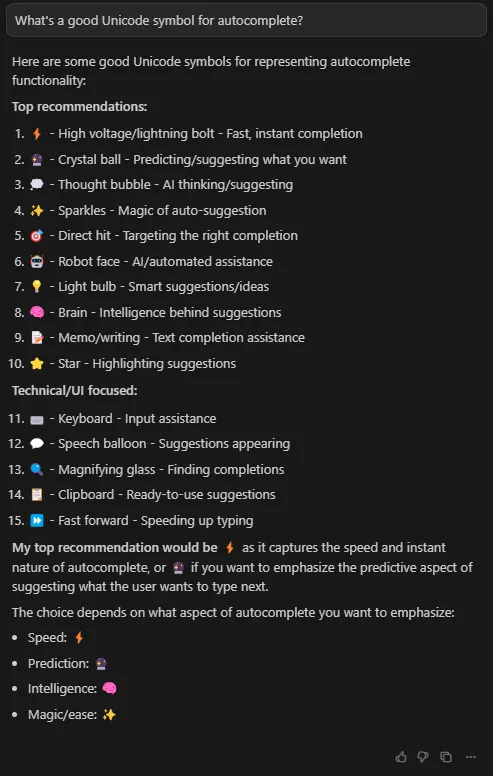

What truly distinguishes general-purpose AI integration is its capacity to assist with tasks that were never explicitly anticipated in the system design. A telling example occurred during documentation work when I needed a Unicode symbol to represent autocomplete functionality. My initial instinct was to search through character maps or Unicode reference sites (the approach I’d used for years). Instead, I simply asked the AI: “I’m looking for a Unicode symbol that represents the concept of autocomplete.”

The AI presented several semantically appropriate options, allowing me to select the most suitable one for the context. What struck me about this interaction was its naturalness. I wasn’t navigating hierarchical menus or parsing reference documentation, but engaging in something closer to collaborative problem-solving. This type of assistance (instantly available for virtually any knowledge domain) represents more than an incremental improvement. It’s a fundamental shift in how we conceptualize tool support.

Implications for the Future of Work

These micro-improvements in daily workflows, when aggregated across the entire spectrum of knowledge work, point toward transformative changes in how we approach professional tasks. The challenge I’ve observed repeatedly is conveying the magnitude of this shift to those who haven’t deeply integrated AI assistance into their workflows.

Many professionals have experimented with ChatGPT or similar tools, but often through isolated interactions that fail to demonstrate the compound value of systematic integration. I made this mistake myself at first, treating AI as an occasional research tool rather than as infrastructure for knowledge work. My experience suggests that the real gains emerge not from occasional AI consultation, but from architecting workflows where AI assistance is woven into the fabric of daily practice. The transition requires rethinking fundamental assumptions about how information is structured, accessed, and synthesized. The question of optimal interface design for AI-augmented business software remains an open and actively evolving challenge.

Looking ahead, I expect we’ll internalize these improvements much as we now take desktop publishing, spell-checking, or internet search for granted. These are revolutionary capabilities that have become invisible through ubiquity. The opportunity lies in recognizing and deliberately shaping this transformation while we can still perceive its contours. As Peter Drucker noted about knowledge work, the greatest productivity gains come not from working harder, but from fundamentally rethinking how work gets done.