Work in June 2015 focused on analyzing Google’s Project Soli announcement and exploring how radar-based gesture recognition could integrate with visual touch concepts to create more robust multimodal interaction systems.

Google Project Soli Analysis

Google’s Project Soli announcement—using miniaturized radar chips for gesture recognition—represented a significant development in interaction technology. The radar approach offered advantages over optical systems: immunity to lighting conditions, ability to detect sub-millimeter finger movements, and potential for very compact form factors.

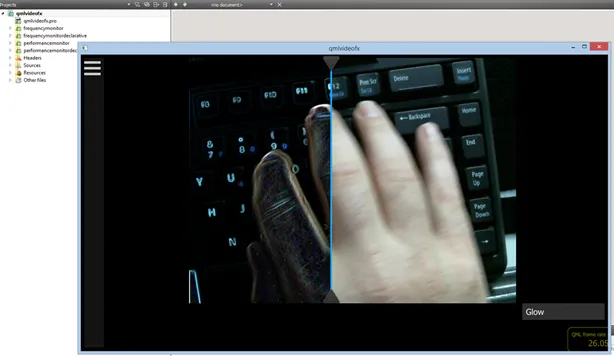

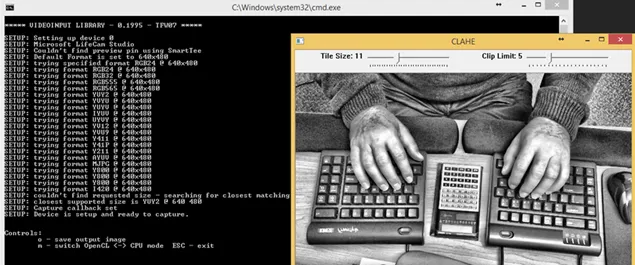

Detailed analysis of available information revealed potential synergies with visual touch concepts. The key insight: visual touch could serve as a calibration and training mechanism for radar gesture recognition systems. By providing ground truth data about precise hand and finger positions, visual touch could help train the machine learning pipelines that interpret radar signatures.

This represented an innovative integration pathway—using one sensing technology to enhance another rather than treating them as competing approaches.

The combination could potentially offer radar’s robustness with visual touch’s interpretability.
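To make the calibration idea concrete, here is a minimal sketch of how visual touch positions might be paired with radar frames to produce labelled training data. It is an illustration under stated assumptions, not a description of how Soli or any existing pipeline works: the `RadarFrame` and `TouchSample` types, the feature vectors, and the 10 ms matching tolerance are all hypothetical.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RadarFrame:
    t: float               # capture time in seconds
    features: List[float]  # hypothetical feature vector derived from the radar signal


@dataclass
class TouchSample:
    t: float                         # capture time in seconds
    xyz: Tuple[float, float, float]  # fingertip position reported by visual touch


def nearest_sample(samples, times, t, max_gap):
    """Return the sample closest in time to t, or None if none lies within max_gap."""
    i = bisect_left(times, t)
    # Only the neighbours on either side of the insertion point can be nearest.
    candidates = samples[max(i - 1, 0): i + 1]
    best = min(candidates, key=lambda s: abs(s.t - t), default=None)
    if best is None or abs(best.t - t) > max_gap:
        return None
    return best


def build_training_pairs(frames, samples, max_gap=0.010):
    """Pair each radar frame with a ground-truth position captured at about the same time."""
    ordered = sorted(samples, key=lambda s: s.t)
    times = [s.t for s in ordered]
    pairs = []
    for frame in frames:
        match = nearest_sample(ordered, times, frame.t, max_gap)
        if match is not None:  # frames without a close enough label are skipped
            pairs.append((frame.features, match.xyz))
    return pairs
```

Each resulting `(features, xyz)` pair could then serve as one supervised training example. Radar frames with no visual touch sample close enough in time are dropped rather than labelled with a stale position.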

Augmented TV Concept Refinement

Work continued on the augmented TV concept document, focusing on retrofitting visual touch interfaces for existing audiovisual devices. The challenge: how to add modern touch-based interaction to TVs, set-top boxes, and other devices that weren’t designed for it.

The augmenter concept emerged: an intermediate device that sits between video sources and displays, overlaying visual touch interfaces on any video stream. This architecture would work with legacy devices without requiring their modification.
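The core operation of such an augmenter is compositing an interface layer over each incoming video frame. The sketch below shows one plausible way to do that with per-pixel alpha blending. It is illustrative only: frames are modelled as nested lists of pixel tuples, the function names are hypothetical, and a real device would do this in dedicated video hardware rather than in Python.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def blend_pixel(video: RGB, overlay: RGBA) -> RGB:
    """Blend one overlay pixel onto one video pixel using the overlay's alpha (0-255)."""
    r, g, b, a = overlay
    # Integer arithmetic with rounding: alpha 255 shows the overlay, alpha 0 the video.
    return tuple((o * a + v * (255 - a) + 127) // 255 for v, o in zip(video, (r, g, b)))


def composite(frame: List[List[RGB]], overlay: List[List[RGBA]]) -> List[List[RGB]]:
    """Return a new frame with the interface overlay drawn on top of the video."""
    if len(frame) != len(overlay) or any(
        len(f_row) != len(o_row) for f_row, o_row in zip(frame, overlay)
    ):
        raise ValueError("frame and overlay must have the same dimensions")
    return [
        [blend_pixel(v, o) for v, o in zip(f_row, o_row)]
        for f_row, o_row in zip(frame, overlay)
    ]
```

Because the overlay is applied to the video stream itself, the source device and the display need no knowledge of the interface, which is what allows the approach to work with unmodified legacy equipment.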

Design Drawing Feedback Cycle

Feedback from the graphic design supplier (Sentient) on initial design drawing work led to refinement iterations. Translating technical concepts into clear visual representations that could serve both patent applications and marketing materials required careful collaboration between engineering and design perspectives.

Trademark and Branding Resolution

Following legal advice that “Visual Touchscreens” and “Vizual Touch” were too descriptive for trademark protection, the Visaptic name was registered as a trading name. This provided a distinctive brand that could be trademarked while allowing “visual touch” to remain as descriptive terminology for the technology itself.

The trademark research and registration process proved more complex than anticipated, with IP Australia’s web application experiencing technical issues during submission. The experience highlighted the administrative overhead of establishing proper intellectual property protection for innovative technologies.

Multimodal Interaction Vision

The month’s work crystallized a comprehensive vision for multimodal interaction combining:

- Visual touch: Precise hand and finger position tracking with visual feedback

- Eye gaze: Rapid region-of-interest identification

- Radar gestures: Robust, lighting-independent gesture recognition

- Capacitive touch: Physical contact confirmation and pressure sensing

Each modality compensated for the others’ limitations. Eye gaze quickly identified where the user was looking, visual touch provided precise positioning within that region, radar offered sub-millimeter gesture detection, and capacitive touch confirmed actual contact. Together, they could create interaction experiences more natural and accurate than any single technology could deliver alone.
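One way to picture how the four inputs might be arbitrated is sketched below. The policy is an assumption made for illustration, not a specified design: gaze selects the active region, the visual touch position must fall inside it, physical contact takes priority as a press, and otherwise a radar gesture (if any) determines the action. The `Region` type and `resolve_interaction` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Region:
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


def resolve_interaction(
    regions: List[Region],
    gaze_xy: Tuple[float, float],
    finger_xy: Tuple[float, float],
    radar_gesture: Optional[str],
    contact: bool,
) -> Optional[Tuple[str, Tuple[float, float], str]]:
    """Combine the four modalities into a single (region, position, action) event."""
    # Eye gaze: coarse, fast selection of the region of interest.
    region = next((r for r in regions if r.contains(*gaze_xy)), None)
    if region is None:
        return None
    # Visual touch: precise position, accepted only inside the gazed-at region.
    if not region.contains(*finger_xy):
        return None
    # Capacitive touch confirms a press; otherwise fall back to the radar gesture.
    if contact:
        action = "press"
    else:
        action = radar_gesture or "hover"
    return (region.name, finger_xy, action)
```

For example, with a "volume" region under the user's gaze and the fingertip inside it, a confirmed contact would resolve to a press on that region, while a finger position outside the gazed-at region would be ignored as unintended.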

The convergence of these technologies—some developed independently by major companies like Google and Tobii—suggested the timing was right for integration work that combined their strengths. The challenge shifted from proving individual technologies to architecting systems that leveraged multiple modalities in coherent, user-centered ways.

The R&D phase of Visual Touchscreens had explored depth sensors, fisheye cameras, eye trackers, and now radar gestures. Each experiment built understanding of possibilities and limitations. The path forward involved synthesizing these learnings into integrated systems that delivered on the original vision: making touch interaction available wherever users needed it, unconstrained by physical touch surfaces.