January 2015 brought experimentation with ultra-wide-angle fisheye lenses and alternative camera approaches, exploring whether extreme fields of view could solve the proximity sensing challenges inherent in visual touch systems.

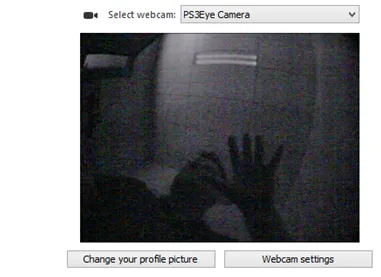

PS3 Eye Camera Experimentation

Research into low-cost camera options led to the purchase of PS3 Eye USB camera boards with M12 lens mounts for prototyping. These consumer gaming cameras offered acceptable resolution and frame rates (640×480 at up to 60 fps) and were easy to hack: the M12 mount allowed the lens to be replaced, a crucial requirement for experimentation.

Two M12 super fisheye lenses with a 190-degree field of view were ordered from China. The international payment had to account for bank fees, which had caused problems in earlier transactions. When the lenses arrived, experiments began immediately with both the Duo3D (M8 mount) and the PS3 Eye cameras (M12 mount).

Mounting the M8 lens slightly deformed the Duo3D board because of the lens’s width, a reminder that physical integration matters as much as optical performance. Getting both camera systems running with the ultra-wide lenses confirmed mechanical compatibility but exposed optical limitations.

Fisheye Lens Results

Testing the 190-degree fisheye configurations with various touch panel sizes yielded mixed results. The extreme field of view did allow more finger touch points to remain visible even when very close to the touch surface. However, practical limitations emerged:

- For 10-inch panels, the fisheye approach wasn’t practical

- 7-inch panels showed more promise but remained marginal

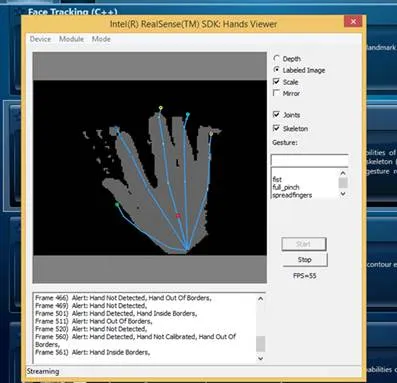

- Image processing for thumb and finger detection at such close range proved difficult

- The PS3 Eye cameras required external IR illumination (provided by a Leap Motion device) due to image darkness
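Why dark frames hurt is easiest to see with the simplest kind of fingertip detector for IR-lit scenes: threshold the grayscale frame and group the bright pixels into connected blobs. This is a generic illustration, not the processing used in these experiments; the function name `find_bright_blobs`, the threshold, and the tiny test frame are all hypothetical. If fingers are not clearly brighter than the background, no single threshold separates them, which is one reason extra IR light was needed.

```python
from collections import deque


def find_bright_blobs(image, threshold, min_area=1):
    """Return (row, col) centroids of 4-connected regions with pixel >= threshold.

    image: list of equal-length rows of grayscale intensities (0-255).
    Hypothetical helper for illustration only.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] < threshold:
                continue
            # Breadth-first flood fill collects one bright region.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Small regions are treated as noise and dropped.
            if len(pixels) >= min_area:
                cy = sum(p[0] for p in pixels) / len(pixels)
                cx = sum(p[1] for p in pixels) / len(pixels)
                centroids.append((cy, cx))
    return centroids


# Hypothetical 4x6 IR frame with two bright "fingertips".
frame = [
    [10, 10, 10, 10, 10, 10],
    [10, 200, 210, 10, 10, 10],
    [10, 205, 220, 10, 10, 180],
    [10, 10, 10, 10, 10, 190],
]
print(find_bright_blobs(frame, threshold=128, min_area=2))
```

Raising `min_area` discards specular noise, but at very close range a finger fills a large, unevenly lit part of the frame, so a fixed global threshold like this one breaks down quickly.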

These experiments confirmed that while fisheye lenses kept more finger touch points in view close to the surface, a practical implementation faced significant image processing challenges: the extreme distortion and reduced resolution toward the frame edges complicated finger detection.
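To put rough numbers on the resolution cost, the sketch below compares an ideal equidistant fisheye (r = f·θ) with a conventional pinhole, or rectilinear, lens (r = f·tan θ). Every number is an assumption for illustration: a 640×480 frame, the 190-degree image circle scaled to fit the 480-pixel height, and a hypothetical 75-degree rectilinear lens spanning the 640-pixel width. Real fisheye lenses only approximate the equidistant model.

```python
import math

# Assumed values for illustration, not measurements from these experiments.
SENSOR_W, SENSOR_H = 640, 480
FISHEYE_FOV_DEG = 190.0
RECTILINEAR_FOV_DEG = 75.0  # hypothetical conventional lens across 640 px


def equidistant_focal_px(circle_diameter_px, fov_deg):
    """Focal length in pixels so the full FOV just fills the image circle (r = f * theta)."""
    return (circle_diameter_px / 2.0) / (math.radians(fov_deg) / 2.0)


def rectilinear_focal_px(width_px, fov_deg):
    """Pinhole focal length so the horizontal FOV spans the frame width."""
    return (width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)


def rectilinear_radius(f_px, theta_deg):
    """Pinhole mapping r = f * tan(theta); rays at 90 degrees or more never land."""
    if theta_deg >= 90.0:
        return math.inf
    return f_px * math.tan(math.radians(theta_deg))


f_fish = equidistant_focal_px(SENSOR_H, FISHEYE_FOV_DEG)
f_rect = rectilinear_focal_px(SENSOR_W, RECTILINEAR_FOV_DEG)

# Radial pixels per degree at the optical axis (dr/dtheta at theta = 0).
# For the equidistant model this value is the same at every radius.
fish_px_per_deg = f_fish * math.pi / 180.0
rect_px_per_deg = f_rect * math.pi / 180.0

print(f"fisheye:     {fish_px_per_deg:.2f} px/deg")
print(f"rectilinear: {rect_px_per_deg:.2f} px/deg at center")
print("pinhole radius at 95 deg:", rectilinear_radius(f_rect, 95.0))
```

Under these assumptions the fisheye samples roughly 2.5 pixels per degree, against about 7.3 near the center of the rectilinear lens, so a fingertip covers far fewer pixels. The pinhole mapping also diverges as θ approaches 90 degrees, which is why a 190-degree view requires a fisheye-style projection in the first place.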

Touch Panel Sourcing

Discussions with Fannal Electronics explored sourcing touch panels closer to a 16:9 aspect ratio, which suits TV and monitor integration better. Questions about USB converter options and Windows driver availability reflected the ongoing goal of finding components that simplify integration instead of requiring low-level driver development.

USB-to-I2C adapter research with team members explored how to interface with touch controllers at the protocol level, providing flexibility when off-the-shelf USB solutions weren’t available.

HDMI Stick PC Platform

A new hardware platform arrived: an HDMI stick PC with an Intel Atom processor running Windows. This ultra-compact form factor had potential for embedded visual touch applications. Setup involved resolving Windows activation issues (an MSDN license was needed instead of the expected Windows 8.1 with Bing) and installing the necessary updates.

The HDMI stick form factor suggested interesting integration possibilities: a complete Windows PC that plugs directly into a display’s HDMI port and hides behind it, typically drawing power from a small USB supply because an HDMI port cannot deliver enough current to run it. This could enable visual touch functionality without requiring an external computer.

Visual Touch Typing Exploration

Research into the Kinesis Advantage keyboard series sparked a new concept direction: visual touch typing. Specialized ergonomic keyboards have steep learning curves that a visual overlay showing hand positions and correct finger placement might ease. This was a different application of the core visual touch technology: not replacing keyboards but augmenting them with real-time visual feedback.

Hover Touch and Advanced Sensor Research

The search for better sensing technologies continued. Hover touch in smartphones, particularly from Chinese manufacturers following Samsung’s lead, was investigated. Although these phones offered hover detection, obtaining development hardware and documentation proved difficult.

RGBD (color + depth) and RGBI (color + infrared) sensor technologies represented the ideal solution direction. Companies like Omnivision were developing relevant chips, and projects like Google’s Project Tango and MV4D showed industry movement toward integrated 3D sensing. However, none of these advanced sensors were available as evaluation kits for independent developers.

Subscribing to relevant blogs (such as Double Aperture) and company feeds (such as the one for the Fitlet compact embedded PC) became part of tracking how sensor technology was evolving. The long-term strategy required staying aware of what would become available in 12 to 24 months, even while current prototypes relied on today’s less-than-ideal solutions.

Leap Motion Teardown

A desire for hands-on hardware understanding led to an attempted Leap Motion disassembly guided by teardown videos. This required a specialized tool (a #00 Phillips screwdriver from a jeweler’s set) but offered insight into the device’s internal construction. Understanding how existing gesture recognition products were engineered informed thinking about custom hardware design.

The month demonstrated continued hardware exploration across multiple parallel tracks: ultra-wide-angle optics, compact PC platforms, keyboard augmentation concepts, and advanced sensor technology monitoring. Each experiment revealed both possibilities and practical limitations, building knowledge that would inform eventual product decisions.