March 2014 brought significant progress on both the software and hardware fronts of the Visual Touchscreens project. The month began with a critical performance challenge and ended with working physical prototypes demonstrating the depth-sensing touch concept.

Solving the Frame Rate Problem

The search for adequate rendering performance led through several dead ends before the right solution emerged. Initial experiments with Direct3D looked promising and prompted the purchase of technical references, including “Direct3D Rendering Cookbook” and “Introduction to 3D Game Programming with DirectX 11.” However, a review of the material suggested that the learning curve for DirectX programming was too steep for the project timeline. A simpler approach would be preferable if it could deliver the needed frame rates.

Further SharpDX 2D experiments gave disappointing results: only about 10 frames per second when rendering at full resolution. This explained why earlier attempts had failed to achieve responsive performance. Win32 functions such as PatBlt were also tested for rendering large windows, but the results remained poor. DirectShow and shader-based approaches were considered but abandoned as too complex.

The breakthrough came from an unexpected source: WPF’s WriteableBitmap, already used in the existing MacroView desktop code. Despite some early slowdowns caused by inexperience with WPF, a test with a white noise generator reached an impressive 140 frames per second at full-screen resolution. This was exactly the performance needed.
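WriteableBitmap lets application code write raw pixels into a buffer that WPF then presents. The white noise test can be approximated as follows: fill a 32-bit BGRA buffer with random bytes, then force the alpha channel to fully opaque. This Python sketch only builds the pixel buffer. In WPF, the bytes would then be copied into the bitmap, for example with `WriteableBitmap.WritePixels`, or by writing to `BackBuffer` between `Lock`, `AddDirtyRect` and `Unlock`. The BGRA layout, the unpadded stride, and the function names are assumptions for illustration. The `frame_budget_ms` helper shows what the frame rates mean for each frame.

```python
import random

BYTES_PER_PIXEL = 4  # assumption: 32-bit BGRA pixels (blue, green, red, alpha)


def white_noise_frame(width: int, height: int, rng: random.Random) -> bytearray:
    """Fill a tightly packed BGRA buffer with random colour, fully opaque."""
    stride = width * BYTES_PER_PIXEL  # assumes rows carry no padding
    buf = bytearray(rng.randbytes(stride * height))
    # Every fourth byte is alpha; overwrite it so each pixel is opaque.
    buf[3::BYTES_PER_PIXEL] = b"\xff" * (width * height)
    return buf


def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available to produce one frame at the given rate."""
    return 1000.0 / target_fps
```

At 140 fps, each frame has a budget of roughly 7.1 ms. At the 10 fps seen with SharpDX 2D, each frame took 100 ms.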

The Depth Camera Tablet application was rebuilt using WPF, achieving frame rates comparable to the white noise tests while maintaining the full functionality of the Windows Forms version. The performance problem was solved.

Building Physical Prototypes

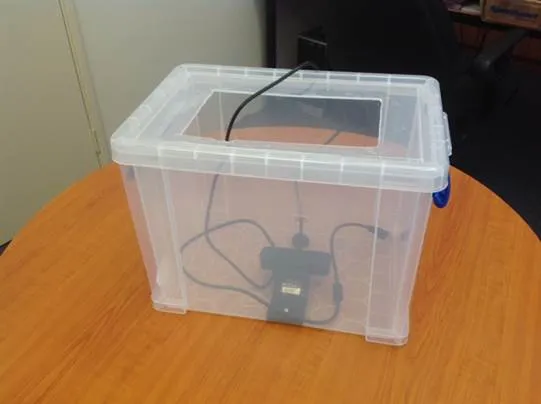

With working software in hand, attention turned to physical prototypes. When a commissioned polycarbonate enclosure fell through, improvisation became necessary. Trips to local hardware and office supply stores yielded the components for a functional prototype: a plastic container modified to hold a depth camera beneath a capacitive touch panel.

This hands-on approach yielded immediate design insights. Positioning the depth camera lens directly beneath the center of the touch panel, rather than off to one side, proved crucial. Testing also showed, however, that when the camera was mounted too close to the panel it could not see hands near the edges of the touch surface. Two requirements followed: a calibration system, and more distance between camera and surface.
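The edge-visibility problem follows from simple camera geometry. Under a pinhole model, a camera with field of view θ at distance d sees a strip 2·d·tan(θ/2) wide. To cover a panel of width W, the camera therefore needs d ≥ W / (2·tan(θ/2)), and this must be checked separately for each axis. The sketch assumes the lens is centered under the panel. The function names are hypothetical, and any field-of-view numbers you plug in are placeholders, not the Intel camera's specifications.

```python
import math


def visible_extent(distance_mm: float, fov_deg: float) -> float:
    """Width of the strip a pinhole camera sees at the given distance."""
    return 2.0 * distance_mm * math.tan(math.radians(fov_deg) / 2.0)


def min_distance(panel_w_mm: float, panel_h_mm: float,
                 hfov_deg: float, vfov_deg: float) -> float:
    """Smallest camera-to-panel distance at which the whole panel is in view,
    assuming the lens sits under the panel's center."""
    d_w = panel_w_mm / (2.0 * math.tan(math.radians(hfov_deg) / 2.0))
    d_h = panel_h_mm / (2.0 * math.tan(math.radians(vfov_deg) / 2.0))
    return max(d_w, d_h)  # the tighter axis decides
```

Under this model, a wider panel or a narrower field of view pushes the required distance up. An off-center lens would need even more distance to reach the far edge.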

Multiple prototype iterations followed, each incorporating lessons from the previous version. Additional touch panels were ordered after one cracked during disassembly. A deeper enclosure was constructed to provide more working distance for the depth camera’s field of view.

Software Architecture Refinement

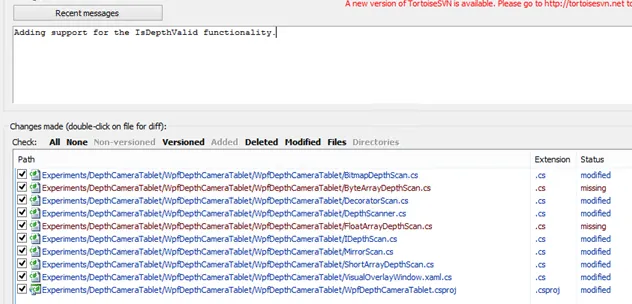

The software side saw significant architectural improvements. An IDepthScan interface was introduced to abstract depth data access, with implementations for both bitmap-based and direct array-based data sources. This separation allowed the application code to work independently of how depth data was obtained.
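The IDepthScan idea can be sketched in Python, with an abstract base class standing in for the .NET interface. The member names (`width`, `height`, `depth_at`), the `BitmapDepthScan` class, and the `nearest_point` helper are hypothetical illustrations, not the project's actual API. `nearest_point` shows the payoff of the abstraction: it works against any implementation without knowing where the samples came from. It assumes that 0 means "no reading" and that smaller values are nearer, which may not hold for the Intel SDK's apparently inverted values.

```python
from abc import ABC, abstractmethod
from typing import Optional, Sequence, Tuple


class IDepthScan(ABC):
    """Read-only view of one depth frame, independent of its source."""

    @property
    @abstractmethod
    def width(self) -> int: ...

    @property
    @abstractmethod
    def height(self) -> int: ...

    @abstractmethod
    def depth_at(self, x: int, y: int) -> int:
        """Raw depth sample at pixel (x, y)."""


class ShortArrayDepthScan(IDepthScan):
    """Backed by a flat, row-major array of 16-bit depth samples."""

    def __init__(self, samples: Sequence[int], width: int, height: int):
        if len(samples) != width * height:
            raise ValueError("sample count must equal width * height")
        self._samples, self._width, self._height = samples, width, height

    @property
    def width(self) -> int:
        return self._width

    @property
    def height(self) -> int:
        return self._height

    def depth_at(self, x: int, y: int) -> int:
        return self._samples[y * self._width + x]


class BitmapDepthScan(IDepthScan):
    """Hypothetical: one byte of depth per pixel, rows possibly padded to `stride`."""

    def __init__(self, pixels: bytes, width: int, height: int, stride: int):
        self._pixels, self._width, self._height, self._stride = pixels, width, height, stride

    @property
    def width(self) -> int:
        return self._width

    @property
    def height(self) -> int:
        return self._height

    def depth_at(self, x: int, y: int) -> int:
        return self._pixels[y * self._stride + x]


def nearest_point(scan: IDepthScan) -> Optional[Tuple[int, int]]:
    """Application code that sees only the interface.
    Assumes 0 = no reading and smaller values = nearer."""
    best = None
    for y in range(scan.height):
        for x in range(scan.width):
            d = scan.depth_at(x, y)
            if d > 0 and (best is None or d < best[0]):
                best = (d, x, y)
    return None if best is None else (best[1], best[2])
```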

New scan implementations emerged from this architecture: ShortArrayDepthScan for direct depth array access, and DecoratorScan with MirrorScan for image transformations. These abstractions proved valuable as development progressed, though working with the actual depth values presented challenges. The Intel SDK’s depth measurements seemed inverted and didn’t match documented millimeter units. Various approaches to depth-to-color mapping were tried, but inconsistencies in the data made interpretation difficult.
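DecoratorScan and MirrorScan fit the decorator pattern. A decorator wraps another scan, forwards its members, and overrides only what it changes. The following is a minimal Python sketch. It assumes a left-right mirror, since the text does not say which axis MirrorScan flips. One plausible use is a camera looking up from below the panel, whose image is reversed relative to a user looking down. `DepthScan` is a structural stand-in for IDepthScan with assumed member names, and `GridScan` is a hypothetical list-backed scan used only to exercise the decorators.

```python
from typing import List, Protocol


class DepthScan(Protocol):
    """Structural stand-in for the IDepthScan contract (member names assumed)."""

    @property
    def width(self) -> int: ...

    @property
    def height(self) -> int: ...

    def depth_at(self, x: int, y: int) -> int: ...


class DecoratorScan:
    """Wraps another scan and forwards every member to it unchanged."""

    def __init__(self, inner: DepthScan):
        self._inner = inner

    @property
    def width(self) -> int:
        return self._inner.width

    @property
    def height(self) -> int:
        return self._inner.height

    def depth_at(self, x: int, y: int) -> int:
        return self._inner.depth_at(x, y)


class MirrorScan(DecoratorScan):
    """Flips the wrapped scan left to right (horizontal axis assumed)."""

    def depth_at(self, x: int, y: int) -> int:
        return self._inner.depth_at(self.width - 1 - x, y)


class GridScan:
    """Hypothetical list-of-rows scan, used only to exercise the decorators."""

    def __init__(self, rows: List[List[int]]):
        self._rows = rows

    @property
    def width(self) -> int:
        return len(self._rows[0])

    @property
    def height(self) -> int:
        return len(self._rows)

    def depth_at(self, x: int, y: int) -> int:
        return self._rows[y][x]
```

Decorators compose. For example, `MirrorScan(MirrorScan(scan))` reads the same values as `scan`, and further transformations can be stacked the same way without touching the underlying data source.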

The Calibration Challenge

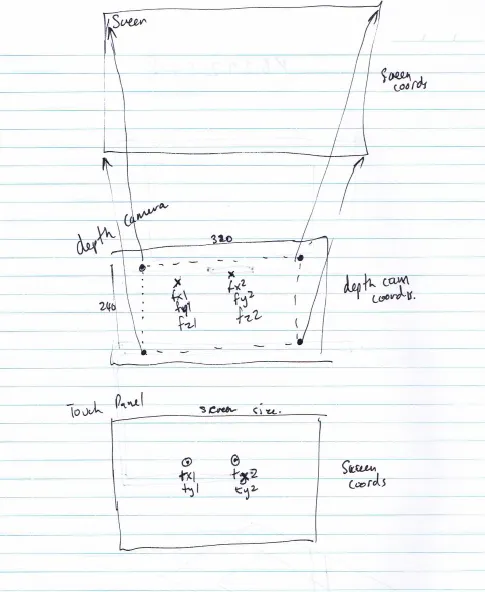

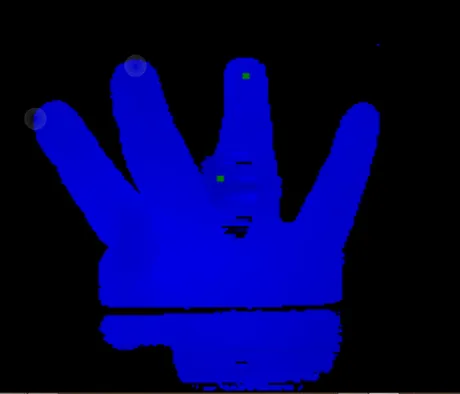

Touch event processing in WPF became the focus mid-month, leading to a rework of the overlay window architecture. Adding calibration support revealed a complex problem: mapping between the depth camera’s view space and the touch panel’s coordinate system.

Linear algebra calculations were implemented to perform the coordinate transformations. The calibration procedure recognized two finger points followed by two touch events, which together established the mapping. Initial results were promising: the mapped rectangle was mostly correct, except for the height calculation in the Y direction.
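Two point correspondences support a simple axis-aligned model: each touch coordinate is a scale times the depth-image coordinate plus an offset. For x, s_x = (q2.x − q1.x) / (p2.x − p1.x) and o_x = q1.x − s_x · p1.x, and y works the same way. This model is an assumption for illustration, and the project's actual linear algebra may also handle rotation. The two calibration points must differ in both x and y, so they effectively act as opposite corners of a rectangle. All names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class AxisAlignedMapping:
    """Maps depth-image coordinates to touch coordinates: q = s * p + o per axis."""
    sx: float
    ox: float
    sy: float
    oy: float

    def apply(self, p: Point) -> Point:
        return (self.sx * p[0] + self.ox, self.sy * p[1] + self.oy)


def calibrate(p1: Point, p2: Point, q1: Point, q2: Point) -> AxisAlignedMapping:
    """Solve scale and offset per axis from two correspondences p_i -> q_i,
    where p_i are finger points in the depth image and q_i are touch points."""
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    if dx == 0 or dy == 0:
        raise ValueError("calibration points must differ in both x and y")
    sx = (q2[0] - q1[0]) / dx
    sy = (q2[1] - q1[1]) / dy
    return AxisAlignedMapping(sx, q1[0] - sx * p1[0], sy, q1[1] - sy * p1[1])
```

A mirrored camera image simply produces a negative scale on the affected axis. An error confined to one axis, such as a wrong height, would appear as a wrong `sy` while `sx` stays correct.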

Gesture recognition proved unreliable, likely due to infrared reflections off the touch panel glass. An alternative approach using WPF’s manipulation APIs was attempted but consumed significant time without success. The final solution used a visual calibration method: touching to freeze the scanned image, then using mouse clicks to define the mapped rectangle. Calibration results were persisted to isolated storage for reuse across sessions.
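The visual calibration step can be sketched in two parts. First, turn the two mouse clicks into a rectangle, whatever order they were made in. Second, serialize the rectangle so the next session can reload it. Python has no equivalent of .NET isolated storage, so JSON text in a plain file stands in for the persisted form. The `CalibrationRect` fields and all function names are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class CalibrationRect:
    """Mapped rectangle in screen coordinates (hypothetical field names)."""
    left: float
    top: float
    width: float
    height: float


def rect_from_clicks(a: Point, b: Point) -> CalibrationRect:
    """Normalize two clicked corners, in either order, into a rectangle."""
    left, right = sorted((a[0], b[0]))
    top, bottom = sorted((a[1], b[1]))
    return CalibrationRect(left, top, right - left, bottom - top)


def calibration_to_json(rect: CalibrationRect) -> str:
    return json.dumps(asdict(rect))


def calibration_from_json(text: str) -> CalibrationRect:
    return CalibrationRect(**json.loads(text))


def save_calibration(rect: CalibrationRect, path: Path) -> None:
    """Persist the rectangle; a plain file stands in for isolated storage."""
    path.write_text(calibration_to_json(rect))


def load_calibration(path: Path) -> Optional[CalibrationRect]:
    """Return the saved rectangle, or None if no calibration exists yet."""
    if not path.exists():
        return None
    return calibration_from_json(path.read_text())
```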

Camera Positioning Experiments

Late in the month, experiments tested mounting the depth camera above the surface rather than below it. In this configuration, the infrared illumination reflected off the surface in an elliptical pattern because of the reflection angles. Attempts to filter out the surface by rejecting certain depth ranges ran into one of two problems: either the surface remained visible, or the depth resolution was too coarse to distinguish hands close to the surface from the surface itself.
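Rejecting a depth range amounts to a threshold. With the camera above the surface, a hand lies nearer the camera than the surface does, so one sketch keeps only samples nearer than `surface_depth − margin`. The margin captures the trade-off described above. If it is too small, surface noise and reflections leak through. If it is too large, fingertips close to the surface are discarded along with it. Values are in raw sensor units, since the SDK's units were uncertain. Using a single global surface depth is a simplifying assumption, given that the reflection pattern varied across the surface. The function name is hypothetical.

```python
from typing import List, Sequence


def mask_above_surface(depths: Sequence[int], surface_depth: int, margin: int) -> List[bool]:
    """True where a sample is nearer the top-mounted camera than the surface
    by more than `margin`. Zero is treated as 'no reading' and rejected."""
    cutoff = surface_depth - margin
    return [0 < d < cutoff for d in depths]
```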

These experiments confirmed that despite reflection challenges, positioning the camera below a transparent touch surface remained more effective for demonstrating the visual touch concept than a top-mounted configuration.

Professional Prototyping

As the software matured, professional prototype development began. A cardboard mockup documented the dimensions for a plastics fabrication company to produce an acrylic frame. When the first prototype arrived with deviations from the specifications, modifications were requested. Experimentation determined that a height of 220 mm between camera and panel gave the best range for hand recognition without running into the depth sensor’s limits.

The month closed with both software and hardware components coming together into a coherent demonstration of the visual touch concept. Frame rate performance was solved, calibration was functional, and physical prototypes were evolving toward a professional presentation. The technical foundations were in place for the next phase of development.