February 2014 marked an intensive exploration phase for the Visual Touchscreens project, focusing on identifying the right sensor technology to enable touch interaction through depth perception rather than traditional capacitive methods.

Evaluating the Sensor Landscape

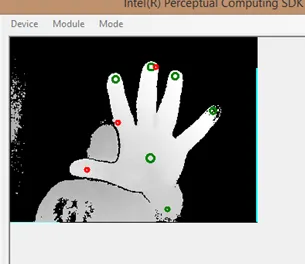

The month began with experiments using the Intel Perceptual Computing SDK paired with a Creative sensor. While the hardware showed promise, the gesture functionality relied entirely on technology licensed from SoftKinetic, which had already been tested and showed the same limitations and performance characteristics. This revealed a pattern that would recur throughout the research: many sensor solutions shared underlying technology, so the real range of options was narrower than it first appeared.

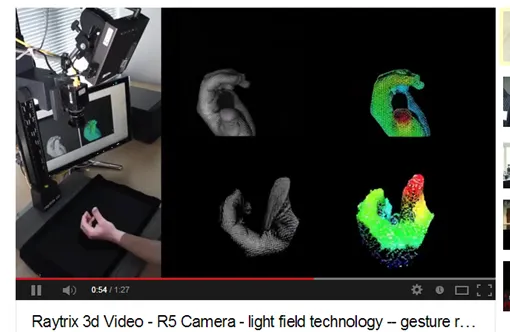

The search expanded to alternative approaches. Light field camera technology emerged as a particularly interesting avenue. Pelican Imaging’s 4x4 camera array caught attention for its potential to capture depth information from multiple perspectives. Contact was made regarding evaluation kits, though obtaining hardware for experimentation proved challenging. Similar inquiries went to Raytrix for their 3D light field cameras, though pricing discussions quickly revealed that these solutions targeted high-end industrial applications rather than prototype development.

Lytro’s consumer-focused light field camera was also evaluated, but its emphasis on end-user photography rather than developer access made it unsuitable for the technical requirements at hand.

The Hardware Procurement Challenge

One surprising aspect of hardware R&D became apparent: sensor manufacturers primarily engage with high-volume customers. Attempts to contact specialized sensor suppliers revealed an industry focused on bulk orders from manufacturers, with little infrastructure for supporting independent innovation or small-scale experimentation. Mentioning patents, rather than opening doors, often closed them: companies appeared to see legal risk rather than a business opportunity.

Despite these barriers, several promising acquisitions were made. A Structure IO sensor was pre-ordered for future experimentation. Capacitive touch panels were sourced from vendors for integration testing. Even Microsoft’s PixelSense table was investigated as a proof-of-concept platform, though limited availability and cost made it impractical for prototyping.

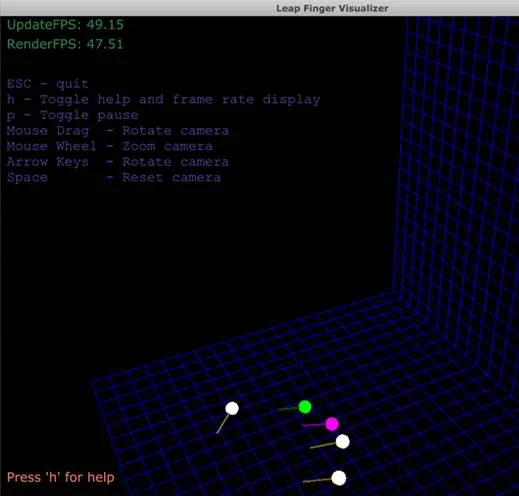

Hands-On with the Leap Motion

Mid-month brought hands-on experimentation with the Leap Motion sensor. The device performed better than expected, offering impressive hand tracking capabilities. However, it exhibited sensitivity to placement and distance that would require careful consideration in any final design. The sensor’s ability to track fingers and thumbs at close range made it a viable candidate for the visual touch concept, even if it wasn’t originally designed for this application.

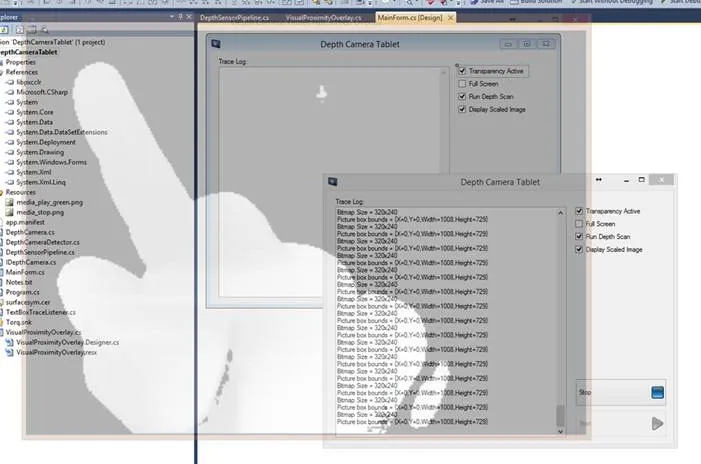

Building the Depth Camera Tablet Application

With sensor evaluation underway, development shifted to creating software to demonstrate the visual touch concept. The goal: a full-screen transparent overlay that could display depth map data in real time, allowing users to see their hands interacting with the touch surface.

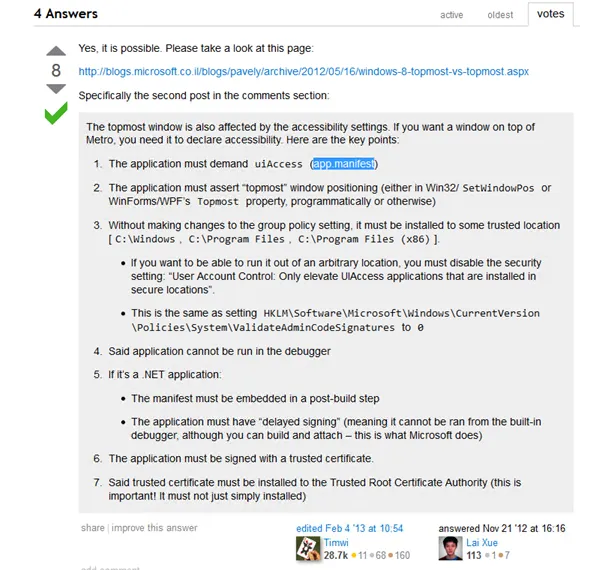

The initial Windows Forms implementation faced immediate challenges. Creating a transparent, topmost window that lets mouse and keyboard events pass through to the applications beneath it required careful use of Win32 extended window styles, specifically WS_EX_TRANSPARENT. Getting this overlay to work in both the desktop and the Windows 8 Metro environment added another layer of complexity.
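To make the window-style step concrete, here is a minimal sketch of how the extended style bits can be combined and applied. It is written in Python with ctypes purely for illustration; the original application was Windows Forms. Pairing WS_EX_TRANSPARENT with WS_EX_LAYERED is an assumption (it is the usual way to get mouse click-through on a top-level window), and the function names `overlay_ex_style` and `make_click_through` are hypothetical. Topmost ordering is not handled here; it is normally requested separately.

```python
import sys

# Extended window style constants from the Win32 headers (winuser.h).
GWL_EXSTYLE = -20
WS_EX_TRANSPARENT = 0x00000020  # mouse hit-testing falls through the window
WS_EX_LAYERED = 0x00080000      # assumption: layered window for alpha blending


def overlay_ex_style(current_style: int) -> int:
    """Return current_style with the click-through overlay bits added."""
    return current_style | WS_EX_LAYERED | WS_EX_TRANSPARENT


def make_click_through(hwnd: int) -> None:
    """Apply the overlay bits to an existing window handle (Windows only)."""
    if sys.platform != "win32":
        raise OSError("Win32 window styles are only available on Windows")
    import ctypes
    from ctypes import wintypes

    user32 = ctypes.windll.user32
    user32.GetWindowLongW.argtypes = [wintypes.HWND, ctypes.c_int]
    user32.GetWindowLongW.restype = wintypes.LONG
    user32.SetWindowLongW.argtypes = [wintypes.HWND, ctypes.c_int, wintypes.LONG]
    user32.SetWindowLongW.restype = wintypes.LONG

    # Read the existing extended style so other bits are preserved.
    style = user32.GetWindowLongW(hwnd, GWL_EXSTYLE)
    user32.SetWindowLongW(hwnd, GWL_EXSTYLE, overlay_ex_style(style))
```

Reading the current style before writing it back matters: overwriting the whole value would silently drop whatever bits the UI framework had already set on the window.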

The first working version successfully displayed depth information, but performance told a different story. Frame rates weren’t sufficient to create the smooth, responsive experience needed to demonstrate the concept effectively. The bitmap-based data transfer from the Intel SDK proved to be a bottleneck.

The Performance Quest

The pursuit of better performance led down several technical paths. Direct access to raw depth data arrays from the Intel SDK seemed promising, but poor documentation made this approach frustrating. The SDK examples worked fine, but deviating from their exact patterns produced confusing results.
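For context, this is roughly what working from a raw depth array involves once the data is in hand. The sketch below is not the Intel SDK's API. It assumes the frame arrives as a flat sequence of 16-bit depth samples in millimetres with 0 meaning "no reading", and the `near_mm` and `far_mm` range is a made-up working distance for hands above a screen.

```python
from array import array


def depth_to_gray(depth_mm, near_mm=200, far_mm=1000):
    """Map raw depth samples to 8-bit gray levels.

    Near objects come out bright, far objects dark. Samples that are
    invalid (0) or at/beyond far_mm are left black.
    """
    span = far_mm - near_mm
    out = bytearray(len(depth_mm))  # starts as all zeros (black)
    for i, d in enumerate(depth_mm):
        if d == 0 or d >= far_mm:
            continue  # no reading, or too far away to matter
        clamped = max(d, near_mm)  # anything closer than near_mm is full white
        out[i] = 255 - (clamped - near_mm) * 255 // span
    return out


# Five hypothetical samples: invalid, nearest, mid-range, far limit, beyond.
frame = array("H", [0, 200, 600, 1000, 1500])
print(list(depth_to_gray(frame)))  # [0, 255, 128, 0, 0]
```

The per-sample loop is only there to show the mapping. In a real pipeline the same arithmetic would run over the whole buffer in bulk, which is exactly where a bitmap round trip adds avoidable copies.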

Attention turned to graphics APIs. SharpDX experimentation showed that high-speed rectangle drawing was achievable: randomly generated rectangles rendered at excellent frame rates. However, once the renderer was fed actual depth map data through GDI bitmap transfers, performance dropped significantly. This unexpected discrepancy suggested that the data transfer mechanism itself was the problem, not the rendering.
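One way to confirm that kind of diagnosis is to time each stage of the frame loop separately instead of looking only at the overall frame rate. The helper below is a hypothetical sketch, not code from the project; `StageTimer` and the stage names are illustrative, and the clock is injectable so the behaviour can be checked without real hardware.

```python
import time


class StageTimer:
    """Accumulate wall-clock time per named pipeline stage."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock
        self.totals = {}  # stage name -> total seconds
        self.counts = {}  # stage name -> number of measurements

    def measure(self, stage, func, *args):
        """Run func(*args), charge its duration to stage, return its result."""
        start = self._clock()
        result = func(*args)
        elapsed = self._clock() - start
        self.totals[stage] = self.totals.get(stage, 0.0) + elapsed
        self.counts[stage] = self.counts.get(stage, 0) + 1
        return result

    def mean_ms(self, stage):
        """Average duration of one call to the stage, in milliseconds."""
        return 1000.0 * self.totals[stage] / self.counts[stage]

    def slowest(self):
        """Name of the stage with the largest accumulated time."""
        return max(self.totals, key=self.totals.get)
```

In a frame loop this would wrap the two halves of the work, for example `timer.measure("transfer", copy_depth_frame)` followed by `timer.measure("render", draw_frame, pixels)`, where both function names are placeholders. If `slowest()` keeps returning the transfer stage while the render stage stays at a few milliseconds, the renderer is not the limiting factor.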

The Windows Forms event loop proved incompatible with SharpDX’s RenderForm class, which prompted a decision to consider rebuilding the application entirely around SharpDX. Sometimes in R&D, the path forward requires taking a step back.

The month closed with a clear understanding: the right sensor technology existed, but integration and performance optimization would require continued iteration. The Depth Camera Tablet concept was technically viable, but the journey from proof-of-concept to fluid demonstration still had obstacles to overcome.